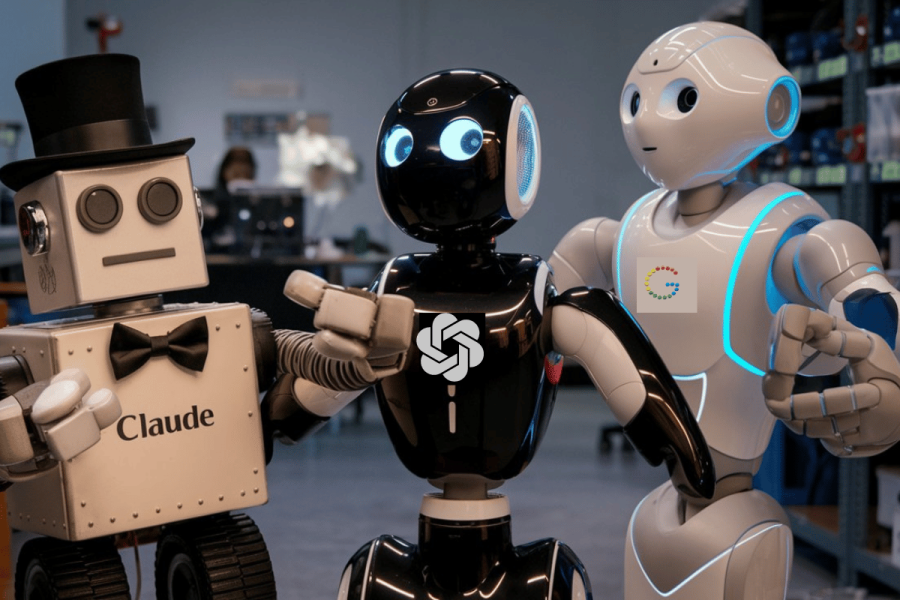

Artificial intelligence chatbots have come a long way since ChatGPT's public launch in late 2022. There is now a wide and constantly evolving range available, and some of them are completely free. With multiple large language models on the market, such as Google's Gemini, OpenAI's ChatGPT, and Anthropic's Claude, we decided to put them to the test.

The one rule is that each model must be freely accessible, either across several platforms or on a single platform that offers a free version. This test doesn't look at image generation, as not every free version of the models offers it.

AI is rather good at writing prompts, so we gave each model a core instruction: generate ideas for tests that push AI to its limits. We then expanded on each suggestion, merged similar ideas, and used a variety of prompts. Each time we tested a model, we started a fresh conversation.

Complex wordplay challenges

Two of the three chatbots incorrectly came up with ‘racecar.’ Credit: DALL-E

The first challenge tests the AI's ability to grasp complex wordplay. I presented each model with word riddles that demand vocabulary, logic, and lateral thinking. We asked the chatbots: "Find an eight-letter word that is a common English word and reads the same both forward and backward. What is it?"

ChatGPT 4o: Racecar

Claude 3.5: Racecar

Gemini 1.5: Rotator

Verdict

A pretty poor performance all round, as none of the chatbots came up with an eight-letter palindrome. "Racecar" and "rotator" both read the same forward and backward, but each has only seven letters.
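The verdict is easy to double-check mechanically, since the riddle sets two conditions: the word must be a palindrome, and it must have eight letters. The short Python sketch below tests each chatbot's answer against both. The helper names `is_palindrome` and `meets_brief` are our own, hypothetical choices, and the check deliberately ignores the riddle's third condition (being a "common" English word), which code alone can't judge.

```python
def is_palindrome(word: str) -> bool:
    """True if the word reads the same forward and backward (case-insensitive)."""
    normalized = word.lower()
    return normalized == normalized[::-1]


def meets_brief(word: str, required_length: int = 8) -> bool:
    """True only if the word is a palindrome AND has the length the riddle asked for.

    Hypothetical helper: it does not check whether the word is common English.
    """
    return is_palindrome(word) and len(word) == required_length


# The answers each chatbot gave to the eight-letter palindrome riddle
answers = {
    "ChatGPT 4o": "racecar",
    "Claude 3.5": "racecar",
    "Gemini 1.5": "rotator",
}

for model, word in answers.items():
    print(f"{model}: {word} | palindrome: {is_palindrome(word)} | "
          f"letters: {len(word)} | meets brief: {meets_brief(word)}")
```

All three answers pass the palindrome check but report seven letters, so `meets brief` comes out `False` for every model.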

Creative expression under constraints

Another aspect worth testing is each chatbot's creativity, flexibility, and ability to generate content under unusual constraints, which shows how well it balances conflicting instructions and innovates within tight limits. We used the following prompt: "Write a short story with no verbs, featuring a happy ending and depicting a complex emotional journey." The stories appear in the screenshots below, and we also asked each chatbot to summarize its own story.

ChatGPT 4o struggled with the story challenge. Credit: ReadWrite via OpenAI

ChatGPT 4o: “The story portrays a journey from sorrow to hope without using verbs. It begins in a cold, dim room filled with scattered memories and sadness. A breeze and a touch bring warmth and comfort, slowly transforming the space and the mood. As two people reconnect, the room brightens, and small gestures lead to a peaceful, hopeful new beginning.”

Claude managed to answer the prompt correctly. Credit: ReadWrite via Claude

Claude 3.5: “A solitary individual experiences a transformative emotional journey. Beginning in isolation and darkness, they grapple with painful memories and impending challenges. Gradually, hope emerges as they forge new connections and persevere through difficulties. The journey culminates in a sense of peace and acceptance, with a brighter outlook for the future.”

Gemini failed to follow the prompt correctly. Credit: ReadWrite via Google Gemini

Gemini 1.5: “A young woman reflects on her life, filled with a mix of emotions. She experiences a sense of peace and hope as she contemplates the past and looks forward to the future.”

Verdict

Claude was the only model that understood it had to avoid verbs in the story. ChatGPT and Gemini each snuck around 10 verbs into their stories, failing the assignment.

Ethical dilemmas

The ‘trolley dilemma’ raises the question of ethics and morality. Credit: DALL-E

We assessed the chatbots' ability to navigate ethical issues, reason through multiple perspectives, and justify their conclusions without falling into simplistic or biased responses. For this, we used a variant of the "trolley problem," which asks whether it is right to sacrifice one person to save a larger number.

The query posed: “Simulate a debate regarding the ‘trolley problem.’ Represent the different perspectives. Discuss the ethical implications and justify each viewpoint.”

The full debate from each chatbot is in this Google Doc. We also asked each one to write a two-sentence summary of its debate, naming a winner.

ChatGPT 4o: “The debate over the trolley problem showcases various ethical perspectives, with utilitarianism advocating for pulling the lever to maximize overall good, while deontological and rights-based views argue against actively causing harm. While each viewpoint offers valid concerns, the utilitarian perspective wins for its pragmatic focus on minimizing total suffering.”

Claude 3.5: “The trolley problem debate pitted utilitarian ethics, which argued for diverting the trolley to save more lives, against deontological ethics, which opposed actively causing harm to an innocent person, with virtue ethics offering a nuanced middle ground. While there’s no clear consensus, the utilitarian argument for minimizing overall harm by saving five lives at the cost of one emerged as the most compelling in this ethical dilemma, albeit with the recognition that this decision comes with significant moral weight and emotional burden.”

Gemini 1.5: “The debate over the trolley problem concluded with no clear winner. While utilitarians argued for pulling the switch to save the most lives, deontologists insisted on the sanctity of individual life, and virtue ethicists emphasized the importance of character and intention. The problem highlights the complexities of moral decision-making and the limitations of ethical theories.”

Verdict

Claude and ChatGPT both named a winner, the utilitarian argument, while Gemini declined to pick one. Claude and ChatGPT therefore share first place in this round.

Unsolvable problems with contradictory requirements

Finally, we concluded with a problem built on contradictory requirements. The point of this assessment is to see how each chatbot handles paradoxes, contradictions, and scenarios that have no clear solution, which can reveal how it approaches problem-solving when faced with an impossible task.

We gave each chatbot the challenge: "Create a plan to increase revenue by 50% while cutting the budget in half and not compromising quality."

We then asked each chatbot to summarize its plan in two sentences.
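The arithmetic behind the challenge shows why it is so demanding. If revenue rises by 50% while the budget falls by 50%, every unit of budget has to generate 1.5 / 0.5 = 3 times as much revenue as before. The minimal Python sketch below computes that multiplier; the function name `revenue_per_budget_multiplier` is a hypothetical label of ours, and the sketch assumes revenue and budget are compared as a simple ratio, ignoring timing, fixed costs, and everything else a real plan would involve.

```python
from fractions import Fraction


def revenue_per_budget_multiplier(revenue_change: Fraction, budget_change: Fraction) -> Fraction:
    """How many times more revenue each unit of budget must generate.

    Changes are fractional: +1/2 means a 50% increase, -1/2 a 50% cut.
    Hypothetical helper that models revenue/budget as a simple ratio.
    """
    return (1 + revenue_change) / (1 + budget_change)


# The challenge: +50% revenue on a budget cut by 50%
multiplier = revenue_per_budget_multiplier(Fraction(1, 2), Fraction(-1, 2))
print(f"Each unit of budget must earn {multiplier}x the revenue it did before")
```

Using `Fraction` keeps the result exact, so the multiplier comes out as exactly 3 rather than a floating-point approximation.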

ChatGPT 4o: “To increase revenue by 50% while halving the budget, focus on high-margin products, optimize pricing, and leverage cost-effective digital marketing and sales strategies. Streamline operations through automation, outsourcing, and lean management while introducing new revenue streams like subscriptions and partnerships to maintain quality.”

Claude 3.5: “This plan focuses on streamlining operations, cutting non-essential costs, and optimizing high-margin offerings to reduce the budget by 50%. Simultaneously, it aims to boost revenue by 50% through enhancing marketing efficiency, improving customer retention, expanding into new markets, and leveraging technology, all while maintaining quality through strategic investments in employee development and process improvement.”

Gemini 1.5: “To achieve a 50% revenue increase with a 50% budget cut, focus on operational efficiency, revenue-generating initiatives, marketing optimization, human capital management, and financial management. Prioritize customer satisfaction, innovation, and ethical business practices.”

Verdict

Claude gave a more human-centric response than ChatGPT and Gemini, highlighting employee development as a way to maintain quality. However, ChatGPT gave the most detailed response of the three LLMs, which gives it the edge over the other two chatbots in this round.

After four tests covering wordplay, creative writing, ethics, and problem-solving, I was surprised to find that Claude still stands out as the best of the three. GPT-4o is impressive, especially when handling more complex reasoning tasks, and the tests showed that each model has its own strengths and has carved out its own niche. Overall, though, Claude remains on top.

Featured image: Ideogram

The post Gemini vs ChatGPT vs Claude – which free AI chatbot is the best? appeared first on ReadWrite.