OneTrainer vs Kohya Training, Plus a Comparative Study of the Masked Training Effect: Full Research

As some of you know, I have recently been doing extensive research on OneTrainer to prepare the very best Stable Diffusion training. As part of this, I have spent days studying the effect of OneTrainer's masked training feature.

Join the Discord to get help, chat, and discuss, and tell me your Discord username to get your special rank: SECourses Discord

So today, after this research, I have completed more than 10 trainings and compared them. I would also like to hear your ideas.

The major upcoming tutorial video is not ready yet, but it will be published on SECourses, hopefully for free, and in it I will show and explain everything, including the training configuration and parameters.

So stay subscribed and turn on notifications so you don't miss it: https://www.youtube.com/SECourses

For this research, I used our very best configurations.

You can download the Kohya configs here: https://www.patreon.com/posts/very-best-for-of-89213064

You can download the OneTrainer presets here: https://www.patreon.com/posts/96028218

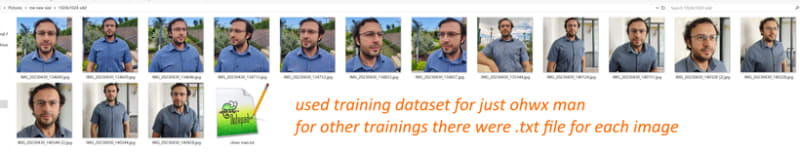

I used the admittedly bad dataset below for training. I chose it because it is easy to collect and lets me compare against my previous trainings, but hopefully I will improve it and explain the improvements to everyone. The caption used during training was “ohwx man”.

For Kohya DreamBooth training, I used our manually collected, ground-truth regularization dataset of 5200 man images, captioned “man”.

You can download this dataset, both raw and preprocessed, here: https://www.patreon.com/posts/massive-4k-woman-87700469

Preparing this regularization image dataset took me a few weeks.

When training with Kohya, the training images were repeated 150 times and trained for 1 epoch.
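In Kohya's folder-based DreamBooth layout, the repeat count is encoded in each concept folder name as `<repeats>_<caption>`. The sketch below shows how such a name splits into repeats and caption. The folder names `150_ohwx man` and `1_man` are my illustrative assumption of how this run could be laid out; the actual configs may set repeats differently.

```python
import re
from typing import Tuple

# Kohya-style concept folders are named "<repeats>_<caption>", e.g.
# "150_ohwx man" (training images) or "1_man" (regularization images).
# These example names are assumptions for illustration only.
FOLDER_PATTERN = re.compile(r"^(\d+)_(.+)$")


def parse_concept_folder(name: str) -> Tuple[int, str]:
    """Split a '<repeats>_<caption>' folder name into (repeats, caption)."""
    match = FOLDER_PATTERN.match(name)
    if match is None:
        raise ValueError(f"not a '<repeats>_<caption>' folder: {name!r}")
    # Only the first underscore separates repeats from the caption.
    return int(match.group(1)), match.group(2)


print(parse_concept_folder("150_ohwx man"))  # (150, 'ohwx man')
print(parse_concept_folder("1_man"))         # (1, 'man')
```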

You can see how to use these Kohya configurations in this video: https://youtu.be/EEV8RPohsbw

For OneTrainer training, I added a second concept and used it as regularization images. OneTrainer doesn't offer DreamBooth training directly, so this is how we try to mimic the same effect.

OneTrainer was trained for 150 epochs.

So every experiment totaled 4500 training steps: half of them train on the regularization images and half on the training images.
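The step arithmetic can be checked with a short sketch. The post doesn't state the number of training images; 15 is what the stated totals imply (4500 / 2 / 150) at an assumed batch size of 1. For OneTrainer I also assume the regularization concept is configured to contribute as many images per epoch as the training concept. Kohya doubles the step count when regularization images are used, pairing one regularization image with each repeated training image.

```python
import math


def kohya_steps(train_images, repeats, epochs, batch_size=1, with_reg=True):
    """Kohya DreamBooth: repeated training images per epoch, doubled when
    regularization images are paired with them."""
    per_epoch = train_images * repeats * (2 if with_reg else 1)
    return math.ceil(per_epoch / batch_size) * epochs


def onetrainer_steps(train_images, reg_images_per_epoch, epochs, batch_size=1):
    """OneTrainer with a second (regularization) concept: both concepts are
    sampled every epoch (assumed configuration)."""
    per_epoch = train_images + reg_images_per_epoch
    return math.ceil(per_epoch / batch_size) * epochs


TRAIN_IMAGES = 15  # inferred from 4500 / 2 / 150, assuming batch size 1

print(kohya_steps(TRAIN_IMAGES, repeats=150, epochs=1))  # 4500
print(onetrainer_steps(TRAIN_IMAGES, reg_images_per_epoch=TRAIN_IMAGES, epochs=150))  # 4500
```

Both setups land on the same 4500 steps: Kohya gets there with many repeats in a single epoch, OneTrainer with many epochs of a single pass.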

If you want to see how to load a preset and train with OneTrainer, here is a quick video: https://youtu.be/yPOadldf6bI

I did the trainings on MassedCompute. We have prepared an amazing template on MassedCompute that currently comes with Automatic1111 Web UI, OneTrainer, and Kohya preinstalled, each with a 1-click launcher.

The template also has Python 3.10.13 installed and set as the default, and it includes a Hugging Face upload notebook and a preinstalled JupyterLab.

Moreover, OneTrainer gave us a coupon code, so by following the instructions in the GitHub readme file below, you can use an A6000 GPU on MassedCompute for only 31 cents per hour.

MassedCompute full instructions: https://github.com/FurkanGozukara/Stable-Diffusion/blob/main/Tutorials/OneTrainer-Master-SD-1_5-SDXL-Windows-Cloud-Tutorial.md

RealVis XL 4 was used as the base model for training, so this training was done on a realistic model. Therefore, the model's ability to generate cartoon or similar stylized images is limited, but it excels at realism.

When testing OneTrainer's masking feature, I followed these steps.

Masks are used only for the training images, not for the regularization images.

For masking, I used OneTrainer's Dataset Tools and masked the images as shown below.

Then I did 9 different trainings comparing Unmasked Weight values. An Unmasked Weight of 0.0 means only the masked area, which is the head, will be trained.

An Unmasked Weight of 1.0 is the same as not using a mask at all.

So I compared Unmasked Weight values from 0.1 to 0.9. I didn't include the 0.1 results, since that setting generated images with anatomically disproportionate bodies.
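A conceptual way to read Unmasked Weight is as a per-pixel loss weight: pixels inside the mask get weight 1, pixels outside get the Unmasked Weight. The sketch below illustrates that idea with a plain weighted MSE over flattened pixel lists. It is not OneTrainer's source code; the real implementation works on latents and may, for example, normalize by the masked area.

```python
def masked_mse(pred, target, mask, unmasked_weight):
    """Weighted MSE: mask=1 pixels get weight 1, mask=0 pixels get
    `unmasked_weight` (soft masks in between are clamped from below)."""
    weights = [max(m, unmasked_weight) for m in mask]
    losses = [w * (p - t) ** 2 for w, p, t in zip(weights, pred, target)]
    return sum(losses) / len(losses)


# Toy example: 4 pixels, the first two belong to the masked head region.
pred = [1.0, 1.0, 1.0, 1.0]
target = [0.0, 0.0, 0.0, 0.0]
mask = [1, 1, 0, 0]

print(masked_mse(pred, target, mask, 1.0))  # 1.0  -> same as no mask
print(masked_mse(pred, target, mask, 0.5))  # 0.75 -> background half-weighted
print(masked_mse(pred, target, mask, 0.0))  # 0.5  -> only the head contributes
```

With a weight of 1.0 every pixel counts equally, which is why it matches unmasked training; with 0.0 the background contributes nothing to the gradient.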

I also want you to analyze the images and tell me which Unmasked Weight works best. I think 0.6 or 0.7 is best: it reduces some overtraining while still generating images with accurate anatomy.

As you reduce the Unmasked Weight, you reduce the overtraining caused by the environment, such as repeated backgrounds and clothing.
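One way to see why lowering the weight reduces environment overtraining is to compute how much of the total per-pixel loss weight falls outside the mask. With a hard mask covering a fraction $f$ of the image and Unmasked Weight $w$, the background share is $w(1-f) / (f + w(1-f))$. The head fraction of 20% below is a hypothetical value, and the sketch assumes equal per-pixel error everywhere, so it describes weight, not actual loss.

```python
def background_share(unmasked_weight, head_fraction):
    """Fraction of total per-pixel loss weight outside a hard 0/1 mask that
    covers `head_fraction` of the image."""
    background = unmasked_weight * (1.0 - head_fraction)
    return background / (head_fraction + background)


HEAD_FRACTION = 0.2  # hypothetical: the head covers 20% of each image

# Tested weights 0.2-0.9, plus 1.0 (equivalent to no mask) for comparison.
for w in [round(0.1 * i, 1) for i in range(2, 11)]:
    share = background_share(w, HEAD_FRACTION)
    print(f"unmasked_weight={w:.1f} -> background share {share:.0%}")
```

Under this assumption the background still carries a large share at 0.6 or 0.7, simply because it occupies most of the frame, so those settings soften rather than remove its influence.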

You can download all of the images (24 tests in total) at full size, including their PNG info and the full prompts used, here: click to download (1.5 GB)

Below are the images and their prompts, but the platform has downscaled them heavily.

The images are not cherry-picked, so much better images can easily be generated.

You can download the full PNG info of the tests in this file: test_prompts.txt

Download the .jpg files to see them at a larger size.