In today’s evolving technological landscape, companies continually reassess their infrastructure choices as their needs change. For Woovi, the driving force behind our decision to leave AWS for our own bare-metal infrastructure was the ability to test ideas rapidly and keep full control of our infrastructure, without overspending. In this article, we’ll walk through Woovi’s transition and explore the motivations, challenges, and outcomes of moving out of the cloud.

Motivations and Aspirations

Our motivations for this transition stemmed from several key factors. First, we aimed to reduce costs significantly while improving the quality of our technical environment, which seemed almost too good to be true. At the same time, we wanted a higher standard of infrastructure performance: faster database operations, more responsive applications, and better, faster logging and debugging. We wanted it all.

AWS Cloud stack

We had a very straightforward setup on AWS, but it was not particularly fast, so any heavy data processing job could slow us down significantly.

EKS

EKS was our managed Kubernetes service on AWS, running on five t3.2xlarge nodes. Performance was acceptable but not great. Because some of our data services ran on Kubernetes, we used EBS for block storage.

S3

Object storage for assets.

ECR

ECR was our container registry, and in general it served us well.

MongoDB

A replica set running on Kubernetes, with one hidden member for analytics and backups.
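A hidden member is declared in the replica set configuration. As a minimal sketch, assuming the configuration document is fetched with `rs.conf()` and applied with `rs.reconfig()`, this Python function appends a hidden member to that document; the host names are hypothetical.

```python
import copy


def add_hidden_member(config: dict, host: str) -> dict:
    """Return a copy of a replica set config with `host` added as a hidden member.

    Hidden members must have priority 0 (they can never become primary) and
    are not advertised to client drivers, so application reads do not reach
    them. That makes them a good fit for analytics queries and backups.
    """
    new_config = copy.deepcopy(config)  # never mutate the caller's document
    next_id = max((m["_id"] for m in new_config["members"]), default=-1) + 1
    new_config["members"].append({
        "_id": next_id,
        "host": host,
        "priority": 0,   # mandatory for hidden members
        "hidden": True,
    })
    return new_config


if __name__ == "__main__":
    # Hypothetical hosts, for illustration only.
    current = {
        "_id": "rs0",
        "version": 3,
        "members": [
            {"_id": 0, "host": "mongo-0.example.internal:27017"},
            {"_id": 1, "host": "mongo-1.example.internal:27017"},
        ],
    }
    print(add_hidden_member(current, "mongo-analytics.example.internal:27017")["members"][-1])
```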

Redis

1 node on k8s.

Elasticsearch

A single node running on k8s, alongside Kibana and APM integrations.

CI/CD

GitHub and CircleCI. This part of the migration was so important and complex that it will get its own series on CI/CD.

Woovi Bare Metal Stack

Data center

We chose a data center in São Paulo that offers colocation: we bring the servers and they take care of hosting them, with redundant internet connectivity, generators, cooling, and 24/7 support.

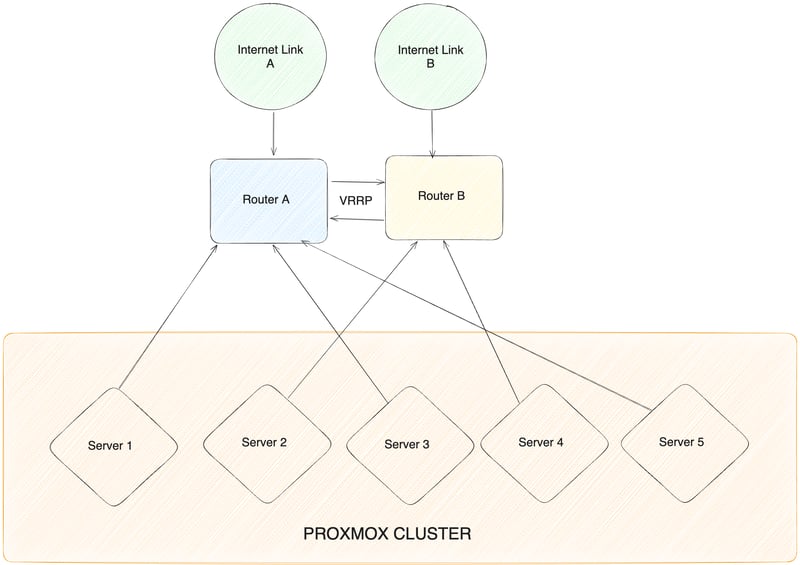

Network

We run two MikroTik routers because we wanted redundancy at the network layer, provided by a VRRP (Virtual Router Redundancy Protocol) setup; we will talk more about how we achieve that later. We also run internal DNS for service discovery, plus other network services we will cover soon.
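VRRP lets two routers share a virtual gateway address: one acts as master, and the other takes over when the master's advertisements stop arriving. The sketch below models only the election rule from RFC 5798 (highest priority wins, ties go to the higher primary IP address). It is a simplified assumption that ignores advertisement timers, preemption settings, and MikroTik's RouterOS configuration; the router names, priorities, and addresses are hypothetical.

```python
from dataclasses import dataclass, replace
from ipaddress import IPv4Address
from typing import Iterable, Optional


@dataclass(frozen=True)
class Router:
    name: str
    priority: int          # 1-254 for backup routers; 255 means it owns the virtual IP
    address: IPv4Address   # the router's primary address on the VRRP segment
    alive: bool = True     # False once its advertisements stop arriving


def elect_master(routers: Iterable[Router]) -> Optional[Router]:
    """Simplified VRRP election: among live routers, the highest priority
    wins, and a tie goes to the higher primary IP address."""
    live = [r for r in routers if r.alive]
    if not live:
        return None
    return max(live, key=lambda r: (r.priority, r.address))


if __name__ == "__main__":
    a = Router("router-a", 200, IPv4Address("10.0.0.2"))
    b = Router("router-b", 100, IPv4Address("10.0.0.3"))
    print(elect_master([a, b]).name)                        # router-a holds the virtual IP
    print(elect_master([replace(a, alive=False), b]).name)  # router-b takes over
```

Keeping the election a pure function makes the failover rule easy to reason about separately from the timing details real routers handle.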

Internet

Two providers of dedicated internet links.

Servers

We chose Proxmox as our hypervisor for its comprehensive feature set and the flexibility it gives us. As a small team managing our own infrastructure, Proxmox fits our requirements well, and it is a tool our team already knows.

Our staging environment is hosted on a Dell T550, where we run a scaled-down version of each production service.

In production, we run five Dell R750 servers.

Kubernetes

We chose MicroK8s for its lightweight footprint and comprehensive feature set. We aimed for simplicity and performance: every application running in our Kubernetes cluster is stateless.

MongoDB

A three-member replica set, with each member on a separate server in an LXC container managed by Proxmox. Each member gets 1.5 TB of SSD storage, 15 CPU cores, and 48 GB of RAM. MongoDB is quite heavy on memory. Our focus is performance, but we also put a lot of effort into backup strategies.
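Much of MongoDB's memory appetite comes from the WiredTiger storage engine, which by default reserves an internal cache of 50% of (RAM - 1 GB) or 256 MB, whichever is larger, and relies on the operating system's filesystem cache for much of the rest. A small worked example, assuming each member's container is allotted the 48 GB above and the default is not overridden via `storage.wiredTiger.engineConfig.cacheSizeGB`:

```python
def wiredtiger_default_cache_gb(ram_gb: float) -> float:
    """Default WiredTiger internal cache size in GB: the larger of
    50% of (RAM - 1 GB) or 256 MB. When MongoDB runs under a container
    memory limit, that limit is treated as the available RAM."""
    return max(0.5 * (ram_gb - 1), 0.25)  # 256 MB = 0.25 GB


if __name__ == "__main__":
    # Assumption: each member's LXC container is allotted 48 GB of RAM.
    print(wiredtiger_default_cache_gb(48))  # 23.5
```

So roughly half of each container's memory goes to the WiredTiger cache, with the remainder shared by the filesystem cache, connections, and other per-process overhead.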

Redis

A single node running in a managed container. We plan to expand this service, but for now it works well for us.

Observability

We upgraded our ELK stack from version 7 to 8 and integrated it through Elastic Agents. We now plan to add Logstash and Beats to improve application observability.

We also added Prometheus and Grafana to our stack, as we want to evolve our Kubernetes observability as we scale.

For network and hardware monitoring, we have a Zabbix Server running on an LXC container.

These solutions overlap considerably. We wanted to test most of them to see which best suits our needs; over the next few months we will understand these tools better, and this setup might change.

CI/CD

We built a full CI/CD platform with Tekton and Argo CD, which we will discuss in detail in its own series, Cloud Native CI/CD.

Registry

We run our own registry using Harbor, which serves as a repository for Docker images and Helm charts. It is integrated into our CI/CD pipelines and Kubernetes environment. Harbor can also integrate with vulnerability scanners such as Clair and Trivy.

Tools

For provisioning we use Ansible, as part of an effort to follow the Infrastructure as Code (IaC) paradigm and keep good visibility as we scale.

Overview

Migration

The migration was simple:

1 – Cut access to the platform using Cloudflare.

2 – Scale down workers on EKS.

3 – Scale down servers on EKS.

4 – Back up disks.

5 – Wait for replication to catch up on the MongoDB cluster.

6 – Scale up services on the new infrastructure.

7 – Restore access through Cloudflare, now pointing to the new infrastructure.

8 – Test absolutely everything!

Done!
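The order of these steps matters: traffic is only reopened once every earlier step has succeeded. A minimal sketch of that gating in Python, where the step names loosely mirror the list above and the step functions are hypothetical placeholders rather than our actual tooling:

```python
from typing import Callable, List, Optional, Tuple

# A step is a human-readable name plus a callable that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_cutover(steps: List[Step]) -> Tuple[List[str], Optional[str]]:
    """Run steps strictly in order, halting at the first failure.

    Returns the names of completed steps and the name of the failed step
    (None if every step succeeded).
    """
    completed: List[str] = []
    for name, action in steps:
        if not action():
            return completed, name  # later steps (e.g. reopening traffic) never run
        completed.append(name)
    return completed, None


if __name__ == "__main__":
    # Hypothetical placeholders: real steps would call Cloudflare, kubectl, etc.
    steps: List[Step] = [
        ("cut access via Cloudflare", lambda: True),
        ("scale down EKS workloads", lambda: True),
        ("wait for MongoDB replication", lambda: False),  # simulate lag too high
        ("restore access via Cloudflare", lambda: True),
    ]
    done, failed = run_cutover(steps)
    print(done, failed)
```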

The most challenging part of the migration was the database. To address it, we created proxy containers for our new replicas and added them as hidden members of the replica set running in our EKS cluster. Over a week-long testing period, replication was nearly perfect, with minimal lag. This gave us confidence that downtime would be kept to a minimum. We did hit some hiccups during the migration, mainly outdated images in our self-hosted registry, which left a few services down for about 10 minutes. Despite these issues, we migrated the entire infrastructure in just 17 minutes.
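Hidden replicas make it possible to watch replication lag before cutting over. A minimal sketch of such a check, assuming the document returned by MongoDB's `replSetGetStatus` command (for example via PyMongo's `client.admin.command("replSetGetStatus")`, which returns `optimeDate` values as `datetime` objects). The one-second threshold and the member names are hypothetical, not the values we used:

```python
from datetime import datetime, timedelta
from typing import Dict


def replication_lag(status: dict) -> Dict[str, timedelta]:
    """Per-member lag behind the primary, from a replSetGetStatus document:
    the primary's optimeDate minus each other member's optimeDate.
    Members without an optimeDate (such as arbiters) are skipped."""
    members = status["members"]
    primary = next((m for m in members if m["stateStr"] == "PRIMARY"), None)
    if primary is None:
        raise ValueError("no PRIMARY in replica set status")
    return {
        m["name"]: primary["optimeDate"] - m["optimeDate"]
        for m in members
        if m is not primary and "optimeDate" in m
    }


def ready_for_cutover(status: dict, max_lag: timedelta = timedelta(seconds=1)) -> bool:
    """True when every non-primary member is within max_lag of the primary."""
    return all(lag <= max_lag for lag in replication_lag(status).values())


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, 0)  # illustrative timestamps
    status = {"members": [
        {"name": "eks-primary:27017", "stateStr": "PRIMARY", "optimeDate": now},
        {"name": "new-hidden-0:27017", "stateStr": "SECONDARY",
         "optimeDate": now - timedelta(milliseconds=300)},
    ]}
    print(replication_lag(status), ready_for_cutover(status))
```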

First Week Impressions

Everything felt almost too fast: in the first week, we fixed bugs that occurred only because our database is now so much faster than before. Platform responsiveness, queries, observability, tooling: everything we had before became much faster.

Our CI/CD is currently broken and deploys are being performed manually, but we expect to have it fully integrated again soon.

As expected, cost was a big win: before the migration, our AWS bill ranged from 50k to 100k BRL.

We will share more in-depth articles about aspects of the migration as we improve our infrastructure. If you want to help…

We are hiring!

Photo by Filipe Dos Santos Mendes on Unsplash