Change is the only constant in our universe, and by default, it leads to increased disorder. To function as a society, we have learned to transform resources in the opposite direction using energy, and one of these resources is data.

Data is, by definition, raw, and to be most useful, it must be processed. The operations used to accomplish this are referred to as transformations because they change the state of the data to make it more useful for decision-making and analysis. Traditional data transformation techniques have included everything from statistical techniques to regex queries, which are sometimes built into full-fledged applications. These techniques, however, are limited in terms of the types of data they can handle and the effort required to use them. To overcome these constraints, machine learning has taken its place among data transformation tools.

Machine learning is the process of teaching programs, also known as models, to perform tasks through iterative exposure to data samples. Two popular approaches are unsupervised learning, in which the model sees only the input data, and supervised learning, in which it sees both the input data and the corresponding target output. In either case, the goal is to train the model to learn a transformation that makes the data more useful.
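To make the idea of learning a transformation from examples concrete, here is a minimal supervised learning sketch. It assumes a toy dataset whose targets follow y = 2x + 1 and fits a straight line with plain gradient descent; the data, learning rate, and iteration count are illustrative choices, not a recommended setup.

```python
# Toy supervised learning: learn y = w * x + b from input/target pairs.
# The data below is made up and was generated from y = 2x + 1.
inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
targets = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = 0.0, 0.0          # the model starts out knowing nothing
learning_rate = 0.05     # illustrative value

for _ in range(2000):    # iterative exposure to the samples
    grad_w = 0.0
    grad_b = 0.0
    for x, y in zip(inputs, targets):
        error = (w * x + b) - y              # prediction minus target
        grad_w += 2 * error * x / len(inputs)
        grad_b += 2 * error / len(inputs)
    # Move the parameters against the gradient of the mean squared error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"learned transformation: y = {w:.3f} * x + {b:.3f}")
```

After training, the learned parameters should sit very close to the values that generated the data, which is the sense in which the model has learned the transformation.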

This article discusses how machine learning can be used to transform data. You will understand the benefits of doing so, as well as any challenges you may encounter along the way. You will also see case studies of machine learning applications in various industries and organisations.

Basics of Data Transformation

Data transformation is the process of modifying data to make it more useful for decision-making. It is fundamental to the concept of data processing because data must change to progress from its raw state to what is considered useful information. It must be transformed. For example, consider a journalist who has recorded an interview with a person of interest and plans to publish an article. This recording contains raw data that they have gathered and can most likely be shared directly; however, to publish an article worth the public’s attention, they must transform that back-and-forth communication into a compelling and true story that reflects the core message of the interview. However tedious this may be, it must be done.

One method for transforming data is to use statistical techniques such as measures of central tendency, which summarize a set of values with a single representative number. Examples include the mean, median, and mode. More advanced techniques include using fast Fourier transforms to convert audio data from the time domain to the frequency domain, for example to produce spectrograms. Matrix operations are another common transformation; they are applied to images during resizing, color conversion, splitting, and so on, converting them into more useful forms. On text data, transformation may involve identifying and extracting patterns using regular expressions (regex). These patterns typically have to be configured carefully to ensure that all the desired information is extracted and edge cases are handled.
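As a rough illustration of two of these traditional transformations, the sketch below summarizes a list of numbers with the mean, median, and mode, and converts a tiny image to grayscale with a weighted sum per pixel. The readings and pixel values are made up, and the grayscale weights are the commonly used luma coefficients, assumed here for illustration.

```python
import statistics

# Hypothetical raw readings (made-up values).
readings = [12, 15, 15, 18, 21, 15, 30]

# Measures of central tendency reduce the list to single summary numbers.
summary = {
    "mean": statistics.mean(readings),
    "median": statistics.median(readings),
    "mode": statistics.mode(readings),
}

# A tiny 1x3 "image" of RGB pixels: red, white, black.
image = [[(255, 0, 0), (255, 255, 255), (0, 0, 0)]]

def to_grayscale(pixels):
    """Color conversion as a weighted sum of the R, G, and B channels."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in pixels
    ]

gray = to_grayscale(image)
print(summary)
print(gray)
```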

Limitations of Traditional Data Transformation Operations

While these data transformation operations are useful, their applicability is limited. Traditional data transformation techniques, for example, can be used to change the size and color of an image but not to identify the objects in it or their location. Similarly, while audio data can be transformed into spectrograms, traditional techniques cannot be used to identify the speaker or transcribe the speech.

Using these techniques also requires a significant amount of effort in many cases. For example, a regex query designed to extract information from text data may necessitate manually reviewing hundreds of samples and dealing with numerous edge cases to ensure that you have developed a pattern that works consistently. Even so, a new sample may present a previously unconsidered edge case.
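The sketch below shows how easily a pattern can miss an unconsidered edge case. It assumes a hypothetical task of extracting dates written as DD/MM/YYYY; the pattern and sample sentences are invented for illustration.

```python
import re

# Hypothetical pattern: dates written strictly as DD/MM/YYYY.
DATE_PATTERN = re.compile(r"\b(\d{2})/(\d{2})/(\d{4})\b")

samples = [
    "Invoice issued on 03/11/2023.",  # the format the pattern was designed for
    "Payment due 3/11/2023",          # edge case: single-digit day
    "Shipped 2023-11-03",             # edge case: ISO-style date
]

# findall returns one (day, month, year) tuple per match in each sample.
matches = [DATE_PATTERN.findall(sample) for sample in samples]

for sample, found in zip(samples, matches):
    print(f"{sample!r} -> {found}")
```

Only the first sample is extracted. Handling the other two means widening the pattern, re-checking earlier samples, and repeating the process each time a new format appears.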

The learning curve for these data transformation techniques may also be steep. As a result, you may find yourself in a situation where you must spend engineering hours learning how to use a new tool before encountering the limitations that come with it.

As an ingenious species, we found ways to get by despite our limited technology. However, we also invested resources in research to find other ways to ease our burdens. As a result, the popularity of machine learning tools has grown over the last two decades.

The Role of Machine Learning in Data Transformation

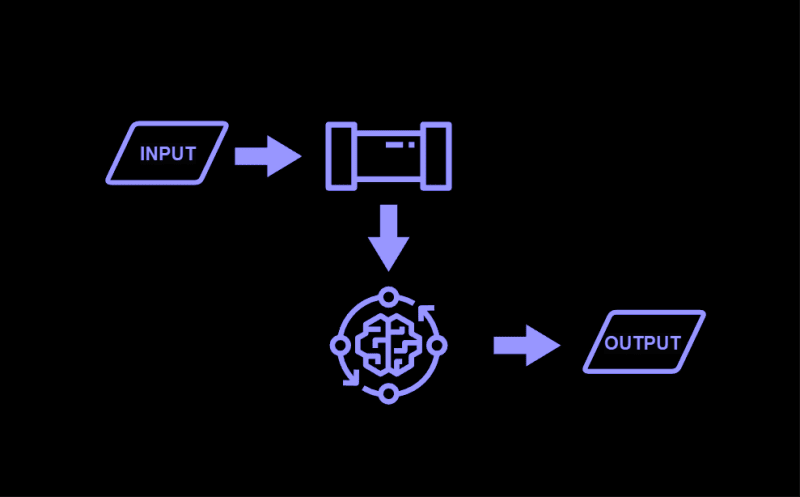

Machine learning models accept input and process it to generate useful outputs for you. In this sense, they are fundamentally data transformation tools trained to learn a transformation function that achieves a specific goal. When you send a prompt to a language model, such as GPT-3.5, it performs a transformation operation to produce an output that satisfies your query.

As a data transformation tool, machine learning opens up a world of possibilities. Many previously unfeasible data transformation tasks are now very possible. With a new goal in mind, the only constraints on developing a system that learns a custom transformation are the availability of data and computing resources. However, in many cases, APIs and open-source models are available to assist you in achieving your data transformation objectives without requiring you to build your own from scratch. If necessary, you can fine-tune an existing model to make it more suitable for your use case. In either case, your task becomes significantly easier to automate.

Whisper, a model open-sourced by OpenAI, is a good example: it is a widely used model for speech transcription. The journalist in the previous example, who may have recorded an interview, can now easily convert that content into text using this model. Another interesting model for data transformation is YOLOv8 (You Only Look Once). This model is part of a family of models that help you detect and locate objects in images. YOLOv8 is also useful for segmentation, pose estimation, and tracking. Another fascinating application of machine learning in data transformation is the creation of embeddings. These are compact numerical representations of your data (text, image, or audio) that can be used for tasks such as search, recommendation, classification, and so on. For text data, there are a variety of pre-trained models and tools available to assist with transformations such as translation, intent detection, entity detection, summarization, and question answering.
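To show how embeddings support a task like search, here is a minimal sketch that ranks items by cosine similarity. The three-dimensional vectors are made-up stand-ins for what an embedding model would produce (real embeddings typically have hundreds of dimensions), and the item names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; closer to 1.0 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up vectors standing in for embeddings produced by a model.
documents = {
    "interview transcript": [0.9, 0.1, 0.0],
    "factory camera image": [0.1, 0.8, 0.3],
    "credit card transaction": [0.0, 0.2, 0.9],
}

# Made-up embedding of the user's query.
query = [0.8, 0.2, 0.1]

def search(query_vector, index):
    """Return the name of the item whose embedding is most similar to the query."""
    return max(index, key=lambda name: cosine_similarity(query_vector, index[name]))

best_match = search(query, documents)
print(best_match)
```

In a real system, the vectors would come from an embedding model and the index would usually live in a vector database, but the comparison step follows the same idea.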

While these models’ capabilities are limited, you can fine-tune them to work for your use case after curating a corresponding dataset. This dataset will also not need to be as large as the one required for training from scratch.

Benefits of Automating Data Transformation with Machine Learning

Some benefits of augmenting or replacing traditional data transformation operations with machine-learning-powered ones are discussed below.

Increased Scope of Operation: Data transformation already has applications in almost every digital industry. Machine learning broadens that scope further, extending data transformation and automation to industries and tasks that would normally require human intervention.

Improved Efficiency: Using machine learning in data transformations allows you to automate complex tasks with a reasonable level of accuracy. It increases the efficiency of your operations by allowing you to complete human-level tasks faster and, in some cases, with comparable accuracy.

Reduction in Manual Labor and Human Error: Humans perform a great deal of repetitive work that has long been outside the scope of automation and traditional data transformation processes. Some examples include audio/video transcription, language translation, and object detection. Machine learning uses quality data gathered from humans completing such tasks to teach programs to do the same. This frees you up to perform tasks that are more difficult and beyond the scope of your automation tools. It also reduces the likelihood of human error, especially in the long term. This is because machine learning only requires you to consider the possibility of human errors in the data used to train a model. Following that, there is a much lower chance of human error because the models are more likely to perform as expected and assist you in achieving your goal.

Real-Time Data Transformation Capabilities: Because many data transformation tasks are complex, humans may need to analyze data in batches and then return the results. This is not always feasible, and it is rarely the best option. However, you can automate and process data in real time by leveraging machine learning transformations.

Innovative Solutions in Data Transformation: The Case of GlassFlow

A key part of automating data transformation with machine learning is how you build the systems that make them work. GlassFlow is a platform that removes bottlenecks in the development of data streaming pipelines. It simplifies and accelerates the pipeline building and deployment process for you by abstracting complex technology setup and decision-making. Thus, it allows you to concentrate on ensuring that the functional components of your data pipelines perform as expected.

One significant benefit of GlassFlow’s offering is that you no longer have to worry about scaling issues. Regardless of the number of instances required to handle your production workload, GlassFlow is built with powerful tools like Kubernetes and NATS to help you manage them. This is particularly valuable for machine learning data transformations, which can be computationally intensive.

GlassFlow’s Python-based pipelines are a natural fit for you, as Python is the most popular language for modern data workflows. Once you have installed their package, you can quickly begin building, testing, and deploying pipelines. You can define your applications as Python functions and deploy them with simple but effective commands.
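As a rough idea of what a transformation defined as a Python function can look like, here is a minimal sketch. The function name, its signature, and the event fields are all hypothetical and are not taken from GlassFlow's documentation; check the platform's own reference for the interface it actually expects.

```python
def transform(event: dict) -> dict:
    """Hypothetical per-event transformation (not GlassFlow's actual interface).

    Normalizes the "text" field and adds a word count to the event.
    """
    text = event.get("text", "")
    return {
        **event,
        "text": text.strip().lower(),
        "word_count": len(text.split()),
    }

# Example event with made-up fields.
result = transform({"id": 1, "text": "  Hello World "})
print(result)
```

Keeping each transformation a small, pure function of this kind makes it easy to test locally before it is deployed as part of a pipeline.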

GlassFlow’s setup is also serverless. This means you will not have to worry about provisioning and managing machines in the cloud, which can be extremely stressful, especially with machine learning workflows. GlassFlow also manages updates and upgrades for you. This relieves the burden of maintenance and allows you to devote your engineering hours to more challenging tasks. You can also be confident that there will be no data loss and that your pipeline will be operational at least 99.95 percent of the time. You also have access to a human customer support team that is available to assist you whenever you require it.

All of this is provided through a transparent pricing system: if the generous free tier does not meet your needs, you pay only for the services you use.

Case Studies

Organisations are already using machine learning in a variety of industries to automate and augment their existing workflows. One of these industries is manufacturing, which employs machine learning to detect defective products, monitor workers for safety compliance, manage inventory, and perform predictive maintenance. Machine learning is also used in the healthcare industry to speed up diagnostics by analyzing medical images, as well as to determine whether patients are at risk of certain conditions by processing their data and recommending preventive measures. The e-commerce industry incorporates machine learning into its recommendation systems. They collect user data and, using the models they create, select corresponding products that users might be interested in. Ad recommendation systems and social media platforms use a similar technology. Machine learning transformations are also used in the finance industry to detect fraudulent transactions and estimate credit scores.

Two interesting organisations that use machine learning are discussed below.

Airbus

Airbus manufactures aircraft, ranging from passenger planes to fighter jets, to pioneer sustainable aerospace. Airbus has leveraged machine learning to improve its operations in a variety of ways. For example, as a decades-old company, Airbus needed a way to search through its vast amount of data to find information about an aircraft, airline, and so on. They accomplished this by using Curiosity, a search application that extracts knowledge from both structured and unstructured documents using natural language processing.

On another front, Airbus employs machine learning to analyze telemetry data from the International Space Station. In particular, the company helps ensure the continued operation of the Columbus module, a laboratory on the station, as well as the health of the astronauts on board. To do this, thousands of telemetry data points are sent to Earth for analysis. In the past, operators manually reviewed the data to identify anomalies, which were then fixed by engineers. However, the volume of data being processed, as well as the cost of human errors, necessitated a shift to an automated approach. This involved training models using anomaly detection techniques, which reduced the need for an additional human in the loop and increased process efficiency.
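The source does not describe which anomaly detection techniques Airbus uses, so the sketch below is only a simple statistical stand-in: it flags readings whose z-score exceeds a threshold. The telemetry values, the threshold, and the function name are illustrative assumptions.

```python
import statistics

def find_anomalies(values, threshold=2.0):
    """Return indices of values more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)  # population standard deviation
    if stdev == 0:
        return []  # all readings identical, nothing stands out
    return [
        index
        for index, value in enumerate(values)
        if abs(value - mean) / stdev > threshold
    ]

# Made-up temperature-like readings with one obvious spike.
telemetry = [20.1, 20.3, 19.9, 20.0, 20.2, 35.0, 20.1, 19.8]

anomalies = find_anomalies(telemetry)
print(anomalies)
```

A fixed rule like this needs hand-tuned thresholds for every signal. Learned anomaly detection models aim to capture what normal behavior looks like from the data itself, which matters when thousands of signals are monitored at once.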

Bosch

Bosch is a manufacturing company that makes a variety of household appliances and power tools. When products are created, they are usually manually inspected for defects by humans. This step is critical because detecting flaws early in the supply chain is the most cost-effective approach. To make this process more efficient, Bosch developed the Visual Quality Inspector (VQI), which uses computer vision to automate the quality inspection process.

In detail, Bosch takes in data using visual sensors such as cameras and then preprocesses it using a variety of data transformation operations before passing it to machine learning models. These models transform the data from its image-like state to a target value that can be used to determine whether or not a defect exists. When a defect is detected, an alert is sent to the appropriate personnel, who handle it from there. In this way, Bosch used machine learning to automate a task that traditional data transformation tools are not suited to.

Challenges and Considerations

While machine learning techniques improve your data transformation pipelines in numerous ways, as with any other technology, there are some drawbacks to consider when using them. Some of these are discussed below.

Complexity of implementation: There are numerous highly accurate and pre-trained machine learning models available for use in a wide range of applications. Many of them are ready to use right away, either directly or via an API. However, you may need to fine-tune these models to handle your specific transformation task or, in some cases, create your own from scratch. Even with pre-trained models, you must carefully consider how to package them for deployment in a way that meets your latency requirements. The process of implementing these systems can be non-trivial.

Data privacy and security: The concept of privacy and security is fundamentally about handling data so that only authorized individuals have access to or use it. As a result, it comes as no surprise that it is critical in data transformation, especially for machine learning-powered systems that may require massive amounts of data to train models. Ensuring the privacy and security of those who own the data you are using is not only a technical challenge but also a legal one. You must consider the laws that govern your industry and location when deciding how to process the data you collect from your users and/or the internet as a whole.

The need for skilled professionals in ML technologies: Based on the challenges discussed thus far, it is clear that the people who implement these ML data transformations need some knowledge of the field, the depth of which varies depending on whether they are simply using APIs or training models themselves. This can be difficult because it requires engineering hours to upskill or additional costs to hire skilled professionals. As a result, you must weigh the long-term benefits of using ML technology and make the best decision for your company.

Ethical use of machine learning in data processing: Almost every machine learning technique has the potential to infringe on the rights of others or to manipulate them. As a result, ethics has long been a source of contention in the machine learning industry. You must therefore critically consider the ethical implications of applying machine learning to your industry and use case.

Conclusion

In this article, you learned about data transformation and how to improve it with machine learning. You have learned about the benefits of doing this, as well as some of the challenges and considerations that come with it. You also read case studies about organisations that use machine learning models as data transformation tools.

The selling point of machine learning is that it automates tasks that would otherwise require a lot of work hours or be done inefficiently with other tools. And as time goes by, these models keep getting better. So you should be looking in this direction because, as in many industries, it has the potential to transform how you process data and make your systems more efficient. As they say, the future is data-driven.

GlassFlow assists you in getting on board the data-driven train by preventing you from wasting valuable engineering time on data engineering tooling and setups. Instead, it offers a serverless, Python-based data streaming pipeline that allows you to get started quickly, from development to deployment. Hop on to the GlassFlow pipeline by joining their waitlist today.