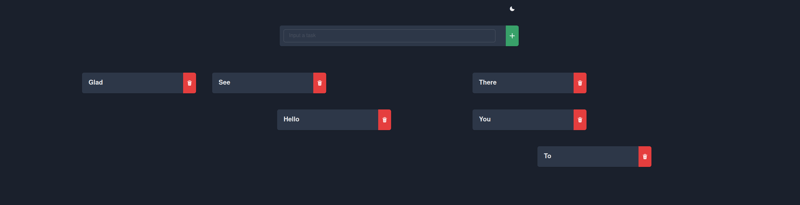

Some time has passed since the last article, and in the meantime I slightly changed the app's layout to what you see above.

I also added drag and drop and drag-to-scroll. The changes can be seen here.

Now it’s time to add voice control.

To begin with, there will be only two voice commands: Add and Delete.

An example scenario

The user says Add and then dictates the task; when they finish speaking, the task is created.

When the user says Delete followed by the task description, that task is deleted.
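Both scenarios boil down to splitting a recognized phrase into a command word and the text that follows it. Here is a minimal sketch of that step, assuming single-word commands. `parseCommand` is a hypothetical helper, not part of the app. It matches the command as a whole word, because a plain substring check would also fire on words such as "address":

```typescript
// Hypothetical helper: split a recognized phrase into a command and its payload
interface ParsedCommand {
  command: string
  payload: string
}

// Escape regex metacharacters so the command is always matched literally
const escapeRegExp = (text: string): string =>
  text.replace(/[.*+?^${}()|[\]\\]/g, '\\$&')

function parseCommand(phrase: string, commands: string[]): ParsedCommand | null {
  for (const command of commands) {
    // \b...\b: match "add" as a whole word, not inside "address"
    const pattern = new RegExp(`\\b${escapeRegExp(command)}\\b`, 'i')
    const match = pattern.exec(phrase)
    if (match) {
      // Everything after the command word is the task description
      const payload = phrase.slice(match.index + match[0].length).trim()
      return { command, payload }
    }
  }
  return null // no known command in this phrase
}

console.log(parseCommand('Add buy milk', ['add', 'delete']))
// { command: 'add', payload: 'buy milk' }
```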

But pronouncing the full description is quite tedious. So we will look for the task that best matches the description and, if we find one, delete it. And if we don't? Maybe display a notification. I don't know yet; we'll see.

First, let's look at the Web Speech API, specifically its SpeechRecognition interface.

It makes recognizing the user's speech quite easy: you just need to create a SpeechRecognition instance, something like this.

```tsx
useEffect(() => {
  // Chrome exposes the constructor with the webkit prefix
  recognition.current = new (window.SpeechRecognition || window.webkitSpeechRecognition)()
}, [])
```
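The constructor may exist under the standard name, under the webkit-prefixed name (as in Chrome), or not at all, so it is worth checking before calling `new`. Below is a sketch of that check under stated assumptions. `RecognitionLike`, `getRecognitionConstructor` and `createRecognition` are hypothetical names. The global object is passed in as a parameter so the logic can run outside a browser:

```typescript
// Hypothetical minimal shape of the SpeechRecognition members used here
interface RecognitionLike {
  continuous: boolean
  interimResults: boolean
  lang: string
  start(): void
  stop(): void
}

type RecognitionConstructor = new () => RecognitionLike

// Pick the standard constructor, else the webkit-prefixed one, else undefined
function getRecognitionConstructor(scope: Record<string, unknown>): RecognitionConstructor | undefined {
  const ctor = scope['SpeechRecognition'] ?? scope['webkitSpeechRecognition']
  return typeof ctor === 'function' ? (ctor as RecognitionConstructor) : undefined
}

function createRecognition(scope: Record<string, unknown>, lang = 'en-US'): RecognitionLike | undefined {
  const Recognition = getRecognitionConstructor(scope)
  if (!Recognition) {
    return undefined // Web Speech API is not available
  }
  const recognition = new Recognition()
  recognition.continuous = true     // keep the session open across pauses
  recognition.interimResults = true // report partial results while the user speaks
  recognition.lang = lang
  return recognition
}
```

In the app, the scope would be `window` (cast as needed). An `undefined` result means voice control should simply stay disabled.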

To start listening, call the start method; to finish, call the stop method.

There are also several event handlers, of which we will need only two: onend and onresult.
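A common need with this lifecycle is keeping recognition alive. The browser may end a session on its own after a pause, which fires onend, so onend restarts it unless stop() was called deliberately. Here is a sketch of that pattern. `RecognizerLike` and `KeepAlive` are hypothetical, and the interface is reduced to the members used here (the real onend handler also receives an Event):

```typescript
// Hypothetical stand-in for SpeechRecognition, reduced to what is used here
interface RecognizerLike {
  start(): void
  stop(): void
  onend: (() => void) | null
}

// Keeps a recognizer running until stop() is called explicitly
class KeepAlive {
  private active = false

  constructor(private readonly recognizer: RecognizerLike) {
    recognizer.onend = () => {
      if (this.active) {
        this.recognizer.start() // ended by the browser, not by us: resume
      }
    }
  }

  start(): void {
    this.active = true
    this.recognizer.start()
  }

  stop(): void {
    this.active = false // clear first so the resulting onend does not restart
    this.recognizer.stop()
  }
}
```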

First try

Let's create a hook that separates the command-listening logic from the application logic.

src/hooks/useSpeechCommand.ts

```ts
import { useEffect, useRef, useState } from 'react'

interface Parameters {
  listen: boolean;
  command: string;
  timeout?: number;
  onEnd?: (value: string) => void;
}

export const useSpeechCommand = ({ listen = false, command = '', timeout = 3000, onEnd = () => { } }: Parameters): [string, boolean] => {
  const recognition = useRef<SpeechRecognition>()
  const [commandTriggered, setCommandTriggered] = useState(false);
  // The recognition callbacks are recreated only when `listen` changes,
  // so they read the flag from a ref instead of from (stale) state
  const commandTriggeredRef = useRef(false)
  const [value, setValue] = useState('');
  const timerID = useRef<ReturnType<typeof setTimeout>>()
  const innerValue = useRef('')

  // Create and configure the recognition once, if the browser supports it
  useEffect(() => {
    const Recognition = window.SpeechRecognition || window.webkitSpeechRecognition
    if (!Recognition) {
      return
    }
    recognition.current = new Recognition()
    recognition.current.continuous = true
    recognition.current.interimResults = true
    recognition.current.maxAlternatives = 1
    recognition.current.lang = 'en-US'
  }, [])

  useEffect(() => {
    if (!recognition.current) {
      return
    }
    if (listen) {
      recognition.current.start()
    } else {
      recognition.current.stop()
    }

    recognition.current.onend = () => {
      commandTriggeredRef.current = false
      setCommandTriggered(false)
      if (innerValue.current) {
        onEnd(innerValue.current)
        // Reset, otherwise the same text is reported again after the next session
        innerValue.current = ''
        setValue('')
      }
      if (timerID.current) {
        clearTimeout(timerID.current)
      }
      // The browser ends the session on its own after a pause: resume listening
      if (listen) {
        recognition.current?.start()
      }
    }

    recognition.current.onresult = (event) => {
      // Concatenate the results that changed in this event
      let result = '';
      for (let i = event.resultIndex; i < event.results.length; i++) {
        result += event.results[i][0].transcript + (event.results[i].isFinal ? ' ' : '')
      }
      if (!commandTriggeredRef.current && result.toLowerCase().includes(command)) {
        commandTriggeredRef.current = true
        setCommandTriggered(true)
      }
      if (commandTriggeredRef.current) {
        // Keep only the text spoken around the command word
        setValue(result.replace(new RegExp(command, 'gi'), ''))
      }
    };

    return () => {
      if (recognition.current) {
        recognition.current.stop()
        recognition.current.onend = null
        recognition.current.onresult = null
      }
    }
  }, [listen])

  // Stop listening `timeout` ms after the last change of the recognized text
  useEffect(() => {
    innerValue.current = value
    if (timerID.current) {
      clearTimeout(timerID.current)
    }
    if (value) {
      timerID.current = setTimeout(() => {
        timerID.current = undefined
        recognition.current?.stop()
      }, timeout)
    }
  }, [value])

  return [value, commandTriggered]
}
```

It turned out quite long, but nothing complicated is going on here. We listen to what is said and look for the command. Once it is found, we set its flag to true and keep listening. When the user stops speaking, we wait for timeout milliseconds and call onEnd with the recognized text. Chrome also stops listening on its own after a while, but that pause is usually too long.
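The loop in onresult is the part that is easiest to get wrong. `event.results` holds every result of the session so far, and `event.resultIndex` is the index of the first one that changed in this event. Each result is a list of alternatives (only one here, because `maxAlternatives = 1`) with an `isFinal` flag. Below is a standalone sketch of the same concatenation. `ResultLike` and `collectTranscript` are hypothetical, and plain arrays stand in for the browser's `SpeechRecognitionResultList`:

```typescript
// Plain-object stand-in for SpeechRecognitionResult
interface ResultLike {
  isFinal: boolean
  0: { transcript: string } // first (and here only) alternative
}

// Same concatenation as in the hook: final results get a trailing space,
// interim ones are appended as they are
function collectTranscript(results: ArrayLike<ResultLike>, resultIndex: number): string {
  let text = ''
  for (let i = resultIndex; i < results.length; i++) {
    text += results[i][0].transcript + (results[i].isFinal ? ' ' : '')
  }
  return text
}

const results: ResultLike[] = [
  { isFinal: true, 0: { transcript: 'hello' } },
  { isFinal: true, 0: { transcript: 'add buy' } },
  { isFinal: false, 0: { transcript: 'milk' } },
]
console.log(collectTranscript(results, 1)) // add buy milk
```

Starting at `resultIndex` means results that have not changed since the previous event are not concatenated again.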

The catch

Now, if we use this hook in both TaskCreator and TaskList (for adding and deleting, respectively), nothing works. It seems that browsers do not allow two Web Speech API recognition instances to run at the same time, so to get the desired result we must use only one instance. For this I will use a context that owns that single instance and exposes methods for subscribing to and unsubscribing from commands.
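Stripped of React, such a context is a small publish/subscribe registry. Components register a listener for an event type and a command and get back a function that unsubscribes them, and the single recognizer dispatches into the registry. Below is a minimal sketch. The `CommandRegistry` name and its `onIdle` callback are hypothetical. The registry also reports when a command has no listeners of either type left, so the recognizer can stop matching it:

```typescript
type EventType = 'result' | 'end'
type Listener = (value: string) => void

class CommandRegistry {
  // event type -> command -> listeners
  private listeners: Record<EventType, Map<string, Set<Listener>>> = {
    result: new Map(),
    end: new Map(),
  }

  // onIdle is called when the last listener (of either type) for a command is removed
  constructor(private onIdle: (command: string) => void = () => {}) {}

  subscribe(type: EventType, command: string, listener: Listener): () => void {
    const byCommand = this.listeners[type]
    if (!byCommand.has(command)) byCommand.set(command, new Set())
    byCommand.get(command)!.add(listener)

    // The returned function undoes exactly this subscription
    return () => {
      byCommand.get(command)?.delete(listener)
      if (!this.hasListeners(command)) this.onIdle(command)
    }
  }

  hasListeners(command: string): boolean {
    return (this.listeners.result.get(command)?.size ?? 0) > 0 ||
      (this.listeners.end.get(command)?.size ?? 0) > 0
  }

  // A missing entry is fine: a command may have only 'end' listeners
  dispatch(type: EventType, command: string, value: string): void {
    this.listeners[type].get(command)?.forEach((listener) => listener(value))
  }
}
```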

Moving on

First of all, let's delete the hook, since it would be inconvenient to keep building on it, and move its logic into a separate class.

src/libs/SpeechCommands.ts

```ts
// Payload passed to the onEnd/onResult callbacks
export interface CommandEvent {
  command: string
  value: string
}

class SpeechCommands {
  private recognition: SpeechRecognition
  private commands: Record<string, boolean> // command -> has it been spoken yet?
  private results: Record<string, string>   // command -> text recognized with it
  private timers: Record<string, ReturnType<typeof setTimeout>>
  private lang: string
  public recording = false
  public onEnd: (e: CommandEvent) => void
  public onResult: (e: CommandEvent) => void

  constructor(public continuous: boolean, public timeout: number) {
    this.recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)()
    this.commands = {}
    this.results = {}
    this.timers = {}
    this.lang = 'en-US'
    this.onEnd = () => { }
    this.onResult = () => { }
    this.recognition.onend = this.onEndHandler.bind(this)
    this.recognition.onresult = this.onResultHandler.bind(this)
  }

  start(lang?: string) {
    this.lang = lang ?? this.lang
    this.recognition.continuous = true
    this.recognition.interimResults = true
    this.recognition.maxAlternatives = 1
    this.recognition.lang = this.lang
    this.recognition.start()
    this.recording = true
  }

  stop() {
    // Clear the flag first so onEndHandler does not restart the recognition
    this.recording = false
    this.recognition.stop()
  }

  addCommand(command: string) {
    this.commands[command] = this.commands[command] || false
  }

  removeCommand(command: string) {
    this.resetCommand(command)
    delete this.commands[command]
  }

  // Forget what was recognized for a command and cancel its silence timer
  private resetCommand(command: string) {
    this.commands[command] = false
    this.results[command] = ''
    if (this.timers[command]) {
      clearTimeout(this.timers[command])
      delete this.timers[command]
    }
  }

  // The session has ended: report every triggered command, then reset it
  private onEndHandler() {
    for (const [command, triggered] of Object.entries(this.commands)) {
      if (triggered) {
        this.onEnd({ command, value: this.results[command] })
      }
      this.resetCommand(command)
    }
    // Chrome stops after a pause; restart unless stop() was called
    if (this.continuous && this.recording) {
      this.recognition.start()
    }
  }

  private onResultHandler(event: SpeechRecognitionEvent) {
    // Concatenate the results that changed in this event
    let result = '';
    for (let i = event.resultIndex; i < event.results.length; i++) {
      result += event.results[i][0].transcript + (event.results[i].isFinal ? ' ' : '')
    }

    for (const command of Object.keys(this.commands)) {
      // Skip commands that were not spoken earlier and are not in this result
      if (!this.commands[command] && !result.toLowerCase().includes(command)) {
        continue
      }
      this.commands[command] = true
      // Everything except the command word itself is the command's value
      this.results[command] = result.replace(new RegExp(command, 'gi'), '')
      this.onResult({ command, value: this.results[command] })

      // Restart the silence timer: onEnd fires `timeout` ms after the last result
      if (this.timers[command]) {
        clearTimeout(this.timers[command])
      }
      this.timers[command] = setTimeout(() => {
        this.onEnd({ command, value: this.results[command] })
        this.resetCommand(command)
      }, this.timeout)
    }
  }
}

export default SpeechCommands
```
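The per-command timers in this class implement a debounce. Each new result for a triggered command cancels the pending timer and starts a new one, so onEnd fires only after `timeout` milliseconds without new speech. Below is the same idea in isolation. The clock is injected so the logic can be checked without waiting, and the `SilenceTimer` class and `Scheduler` interface are hypothetical:

```typescript
// Injected clock; in the app this would just wrap setTimeout/clearTimeout
interface Scheduler {
  set(callback: () => void, ms: number): number
  clear(id: number): void
}

// Calls onSilence(key, lastValue) once `timeout` ms pass with no new value for that key
class SilenceTimer {
  private timers = new Map<string, number>()

  constructor(
    private scheduler: Scheduler,
    private timeout: number,
    private onSilence: (key: string, value: string) => void,
  ) {}

  // Called on every recognition result for a triggered command
  push(key: string, value: string): void {
    const pending = this.timers.get(key)
    if (pending !== undefined) this.scheduler.clear(pending) // restart the countdown
    const id = this.scheduler.set(() => {
      this.timers.delete(key)
      this.onSilence(key, value)
    }, this.timeout)
    this.timers.set(key, id)
  }

  cancel(key: string): void {
    const pending = this.timers.get(key)
    if (pending !== undefined) this.scheduler.clear(pending)
    this.timers.delete(key)
  }
}
```

In the browser the scheduler could be `{ set: (cb, ms) => window.setTimeout(cb, ms), clear: (id) => window.clearTimeout(id) }`. Injecting it is only a testing convenience.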

Now let's create a context that uses this class and provides the listen method to any component that needs it.

src/contexts/SpeechCommandContext.tsx

```tsx
import { createContext, useContext, useRef } from 'react';
import SpeechCommands from '../libs/SpeechCommands';

type SpeechCommandListener = (value: string) => void

type SpeechEvents = 'result' | 'end'

const initialValue = {
  listen: (type: SpeechEvents, command: string, listener: SpeechCommandListener) => () => { },
}

const SpeechCommandContext = createContext(initialValue);

const SpeechCommandProvider: React.FC<React.PropsWithChildren> = ({ children }) => {
  const commander = useRef<SpeechCommands>()
  // event type -> command -> subscribed listeners
  const listeners = useRef<Record<SpeechEvents, Record<string, SpeechCommandListener[]>>>({
    result: {},
    end: {}
  })

  // Forward an event to the command's listeners; `?.` because a command may
  // have listeners of only one type (TaskList subscribes to 'end' only)
  const dispatch = (type: SpeechEvents, command: string, value: string) => {
    listeners.current[type][command]?.forEach((listener) => listener(value))
  }

  // Create the single SpeechCommands instance on first use: children's effects
  // run before the provider's own, so a useEffect here would be too late
  const getCommander = () => {
    if (!commander.current) {
      commander.current = new SpeechCommands(true, 3000)
      commander.current.onEnd = ({ command, value }) => dispatch('end', command, value)
      commander.current.onResult = ({ command, value }) => dispatch('result', command, value)
    }
    return commander.current
  }

  const listen = useRef((type: SpeechEvents, command: string, listener: SpeechCommandListener) => {
    const speech = getCommander()
    if (listeners.current[type][command]) {
      listeners.current[type][command].push(listener)
    } else {
      listeners.current[type][command] = [listener]
    }
    if (!speech.recording) {
      speech.start('en-US')
    }
    speech.addCommand(command)

    // Unsubscribe function
    return () => {
      const list = listeners.current[type][command] ?? []
      const idx = list.indexOf(listener)
      if (idx !== -1) {
        list.splice(idx, 1)
      }
      // Stop matching the command only when no listener of either type is left
      if (!listeners.current.end[command]?.length && !listeners.current.result[command]?.length) {
        speech.removeCommand(command)
      }
    }
  })

  return (
    <SpeechCommandContext.Provider value={{ listen: listen.current }}>
      {children}
    </SpeechCommandContext.Provider>
  )
}

const useSpeechCommandContext = () => useContext(SpeechCommandContext)

export { SpeechCommandContext, SpeechCommandProvider, useSpeechCommandContext }
```
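The essential guarantee of the provider is that exactly one recognizer exists and that it is created on first use. Outside React, the same guarantee can be expressed as a lazily initialized singleton. Below is a sketch with a hypothetical `createShared` helper and an injected factory:

```typescript
// Returns a getter that builds the value on the first call and reuses it afterwards
function createShared<T>(factory: () => T): () => T {
  let instance: T | undefined
  let created = false
  return () => {
    if (!created) {
      instance = factory()
      created = true
    }
    return instance as T
  }
}

// e.g. const getCommander = createShared(() => new SpeechCommands(true, 3000))
```

The separate `created` flag keeps the getter correct even if the factory legitimately returns `undefined`.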

Now we use it in the TaskCreator component to create a task, like this.

src/components/TaskCreator.tsx

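```tsx
const { listen } = useSpeechCommandContext()

// onEnd is created only once (useRef), so it reads the latest tasks from a ref;
// using `tasks` directly would keep the list from the first render
const tasksRef = useRef(tasks)

useEffect(() => {
  tasksRef.current = tasks
}, [tasks])

const onEnd = useRef((value: string) => {
  createTask(value.trim()).then((task) => {
    refresh([...tasksRef.current, task])
  }).catch(() => {
    toast({
      title: 'Error on creating a task',
      status: 'error'
    })
  })
  setTaskName('')
})

// While the user is still speaking, show the recognized text in the input
const onResult = useRef((value: string) => {
  setTaskName(value)
})

useEffect(() => {
  const removeEndListener = listen('end', 'add', onEnd.current)
  const removeResultListener = listen('result', 'add', onResult.current)
  return () => {
    removeEndListener()
    removeResultListener()
  }
}, [])

// …
```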

Finally, you can create tasks by voice: say Add followed by the task, and the task is created 3 seconds after you stop speaking.

Try to create

Well, it's a bit hard to speak so that everything is recognized correctly. Maybe that's because English is not my native language and I have a terrible accent. Here I said “add, buy a milk”, and, funnily enough, it was recognized the other way around.

Delete what we’ve created

Let's add the ability to delete a task by its name. For simplicity, it works so that saying the delete command always deletes something. To find the task with the most similar name, we use the Levenshtein distance.
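The Levenshtein distance is the minimum number of single-character insertions, deletions and substitutions needed to turn one string into another. For example, "buy milk" and "buy silk" are at distance 1. The project uses the levenshtein-edit-distance package. Purely to show what it computes, here is the classic dynamic-programming version (a sketch, not the package's source):

```typescript
// Two-row dynamic programming: at the start of iteration i, prev[j] is the
// distance between the first i-1 characters of `a` and the first j of `b`
function levenshtein(a: string, b: string): number {
  let prev = Array.from({ length: b.length + 1 }, (_, j) => j)
  for (let i = 1; i <= a.length; i++) {
    const curr = [i]
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1
      curr[j] = Math.min(
        prev[j] + 1,        // deletion
        curr[j - 1] + 1,    // insertion
        prev[j - 1] + cost, // substitution (or match)
      )
    }
    prev = curr
  }
  return prev[b.length]
}

console.log(levenshtein('kitten', 'sitting')) // 3
```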

```bash
npm i --save levenshtein-edit-distance
```

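```tsx
import { levenshteinEditDistance } from 'levenshtein-edit-distance'

// …

const tasksRef = useRef<TaskListType>([]);

const { listen } = useSpeechCommandContext()

// Delete the task whose name is closest (by Levenshtein distance) to what was said
const onEnd = useRef((value: string) => {
  const spoken = value.trim().toLowerCase()
  let minDstId
  let minDst: number | undefined

  for (let i = 0; i < tasksRef.current.length; i++) {
    const dst = levenshteinEditDistance(spoken, tasksRef.current[i].name.toLowerCase())
    if (dst === 0) {
      // Exact match, no need to look further
      minDstId = tasksRef.current[i].id
      break
    }
    if (minDst === undefined || dst < minDst) {
      minDst = dst
      minDstId = tasksRef.current[i].id
    }
  }

  if (minDstId !== undefined) {
    handleDeleteTask(minDstId)
  }
})

useEffect(() => {
  const removeEndListener = listen('end', 'delete', onEnd.current)
  return () => {
    removeEndListener()
  }
}, [])

// onEnd is created only once, so it reads the current tasks through this ref
useEffect(() => {
  tasksRef.current = tasks
}, [tasks])

// …
```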
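As written, the handler always deletes the closest task, however poor the match. If that turns out to be too aggressive, one option is to normalize both strings and reject a match whose distance is large relative to the name's length. Below is a sketch of that variant. `findClosestTask`, the `Task` shape and the 0.5 ratio are assumptions for illustration. The distance function is passed in, so it can be the package's levenshteinEditDistance or any equivalent:

```typescript
// Hypothetical task shape for this sketch
interface Task {
  id: number
  name: string
}

// Lowercase, strip punctuation (ASCII only, enough for English) and collapse spaces
const normalize = (text: string): string =>
  text.toLowerCase().replace(/[^a-z0-9\s]/g, '').replace(/\s+/g, ' ').trim()

function findClosestTask(
  spoken: string,
  tasks: Task[],
  distance: (a: string, b: string) => number,
  maxRatio = 0.5,
): Task | undefined {
  const query = normalize(spoken)
  let best: Task | undefined
  let bestDistance = Infinity
  for (const task of tasks) {
    const d = distance(query, normalize(task.name))
    if (d < bestDistance) {
      best = task
      bestDistance = d
    }
  }
  if (!best) {
    return undefined // empty task list
  }
  // Allow at most maxRatio edits per character of the best candidate's name
  const limit = Math.max(1, normalize(best.name).length) * maxRatio
  return bestDistance <= limit ? best : undefined
}
```

When it returns `undefined`, the app could show a notification instead of deleting anything.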

Let’s try to remove an unnecessary task from the list.

Yes, it seems to work. Of course, there are still some bugs and plenty of room for optimization and for improving the user interaction. Maybe I will fix them later, maybe not. Most likely I'll just add a button to enable and disable voice control.

So it seems we have more or less figured out the Web Speech API.

In the next article we will try to track the user's gaze and do something with it.

If you have any questions or suggestions please leave them in the comments.

The full code is available on GitHub.