If you’re interested in machine learning and PHP, Transformers PHP is worth a look: it brings pre-trained transformer models, and the robust text processing capabilities they offer, to PHP applications.

Transformers PHP simplifies both text and image processing tasks by harnessing pre-trained transformer models. It enables seamless integration of NLP functionalities for text and supports image-related tasks such as classification and object detection within PHP applications.

Transformers PHP is an open-source project. You can find more information and the source code on the GitHub repository.

Transformers PHP boasts a range of powerful features designed to enhance text and image processing capabilities within PHP environments:

Transformer Architecture: The models used by Transformers PHP are based on the transformer architecture introduced by Vaswani et al. in “Attention Is All You Need,” which relies on self-attention mechanisms to process text efficiently.

Natural Language Processing Applications: From translation to sentiment analysis, Transformers PHP caters to diverse NLP tasks with ease.

Image Applications: Transformers PHP supports image-related tasks such as classification and object detection within PHP applications.

Model Accessibility: Use the many pre-trained models (in ONNX format) available on platforms like Hugging Face, so you can build features without training models yourself.

Architecture Variety: Choose from architectures such as BERT, GPT, or T5, each suited to different kinds of tasks.
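To make the self-attention idea from the “Transformer Architecture” item concrete, here is a minimal, illustrative sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, written in plain PHP. It is not part of the Transformers PHP API (the library leaves this computation to the model running inside ONNX Runtime), and it simplifies real models by using the token vectors directly as queries, keys, and values instead of learned projections and multiple attention heads.

```php
<?php
// Illustrative scaled dot-product self-attention in plain PHP.
// NOT part of Transformers PHP: real models run this inside ONNX Runtime,
// with learned Q/K/V projections and multiple attention heads.

/** Numerically stable softmax over one row of scores. */
function softmax(array $row): array
{
    $max = max($row);
    $exps = array_map(fn (float $x): float => exp($x - $max), $row);
    $sum = array_sum($exps);

    return array_map(fn (float $e): float => $e / $sum, $exps);
}

/** Multiplies an (n x k) matrix by a (k x m) matrix. */
function matmul(array $a, array $b): array
{
    $result = [];
    foreach ($a as $i => $row) {
        foreach (array_keys($b[0]) as $j) {
            $sum = 0.0;
            foreach ($row as $k => $value) {
                $sum += $value * $b[$k][$j];
            }
            $result[$i][$j] = $sum;
        }
    }

    return $result;
}

/** Swaps the rows and columns of a matrix. */
function transpose(array $m): array
{
    $t = [];
    foreach ($m as $i => $row) {
        foreach ($row as $j => $value) {
            $t[$j][$i] = $value;
        }
    }

    return $t;
}

/** Computes softmax(Q K^T / sqrt(d_k)) V and also returns the attention weights. */
function attention(array $q, array $k, array $v): array
{
    $scale = sqrt(count($k[0]));
    $scores = matmul($q, transpose($k));
    $weights = array_map(
        fn (array $row): array => softmax(array_map(fn (float $s): float => $s / $scale, $row)),
        $scores
    );

    return ['weights' => $weights, 'output' => matmul($weights, $v)];
}

// Three toy "token" vectors; self-attention uses the same vectors as Q, K and V.
$x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]];
$result = attention($x, $x, $x);

foreach ($result['weights'] as $i => $row) {
    echo "token {$i} attention weights: "
        . implode(', ', array_map(fn (float $w): string => number_format($w, 3), $row))
        . PHP_EOL;
}
```

Each row of weights sums to 1 and tells how much one token “looks at” every other token when building its new representation; the first token, for example, attends equally to itself and to the third token, and less to the second.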

Transformers PHP bridges the gap between PHP and advanced NLP, giving developers a practical way to build AI-driven features without leaving PHP.

Transformers PHP and the ONNX Runtime

The backbone of Transformers PHP is its integration with the ONNX Runtime, a high-performance engine designed to execute deep learning models efficiently. Through PHP’s Foreign Function Interface (FFI), Transformers PHP calls the ONNX Runtime library directly, so transformer models run efficiently inside PHP applications.
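PHP’s FFI extension (available since PHP 7.4) lets a script declare C function signatures and call them in an already-compiled shared library. The minimal sketch below applies that mechanism to two functions from the C standard library rather than to ONNX Runtime; it assumes a Linux system with the FFI extension enabled. Transformers PHP relies on the same mechanism to call into the ONNX Runtime library.

```php
<?php
// Minimal PHP FFI sketch (PHP >= 7.4 with the FFI extension enabled; from the
// CLI, the default ffi.enable=preload setting is sufficient).
// It calls two C standard library functions; Transformers PHP uses the same
// mechanism to call into the ONNX Runtime shared library.

// Declare the C signatures. With no library argument, the symbols are looked up
// in the libraries already loaded into the PHP process (libc on Linux).
$libc = FFI::cdef('
    size_t strlen(const char *s);
    int abs(int j);
');

$length = $libc->strlen('Transformers PHP'); // PHP string passed as const char *
$absolute = $libc->abs(-42);                  // PHP int passed as a C int

echo "strlen: {$length}" . PHP_EOL;
echo "abs: {$absolute}" . PHP_EOL;
```

The C return values come back as ordinary PHP integers, which is what makes it possible to wrap a native library such as ONNX Runtime behind a plain PHP API.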

So, what exactly is the ONNX Runtime? At its core, ONNX (Open Neural Network Exchange) is an open format for representing deep learning models, fostering interoperability between various frameworks. The ONNX Runtime, developed by Microsoft, is a cross-platform, high-performance engine built specifically for ONNX models. It provides robust support for executing neural network models efficiently across different hardware platforms, including CPUs, GPUs, and specialized accelerators.

The integration of ONNX Runtime into Transformers PHP via the FFI mechanism brings several key benefits:

Performance: ONNX Runtime is optimized for speed and efficiency, ensuring rapid inference of transformer models within PHP applications. This translates to faster response times and improved overall performance, crucial for real-time or high-throughput applications.

Hardware Acceleration: ONNX Runtime can use the hardware acceleration features available on modern CPUs and GPUs (GPU support depends on the ONNX Runtime build in use). This allows for parallelized computation and better resource utilization, further improving performance.

Interoperability: By adhering to the ONNX format, ONNX Runtime ensures compatibility with a wide range of deep learning frameworks, including PyTorch and TensorFlow. This interoperability facilitates seamless integration of transformer models trained in different frameworks into Transformers PHP applications.

Scalability: ONNX Runtime is designed to scale efficiently across diverse hardware configurations, from single CPUs to large-scale distributed systems. This scalability ensures that Transformers PHP can handle varying workloads and adapt to evolving performance requirements.

In summary, integrating the ONNX Runtime with Transformers PHP via FFI opens up many possibilities for AI-driven applications in the PHP ecosystem: developers can run transformer models directly from PHP, backed by a high-performance inference engine.

Start using Transformers PHP

Before you start using Transformers PHP, I would like to ask you to “star” the GitHub repository and to follow Kyrian, the author of this great library.

Start by creating a new, empty directory and entering it:

mkdir example-app
cd example-app

Then install the package with Composer:

composer require codewithkyrian/transformers

While the command runs, Composer will ask whether to enable and run the Composer plugin for the ankane/onnxruntime package, which downloads the ONNX Runtime binaries for PHP. I suggest answering y (yes).

This way, Composer installs all the dependencies in the vendor/ folder and automatically downloads the ONNX Runtime, so running composer require codewithkyrian/transformers is the only installation step you need.

The ONNX Runtime Downloader plugin is very simple: at the end of the installation, it automatically triggers the download of the ONNX Runtime through the ONNX Runtime PHP package.

Once the package is installed, you can start using it. Create a new PHP file that includes the Composer autoload file, configures the Transformers class, and then initializes a pipeline for the desired task:

// 001 requiring the autoload file from vendor

require ‘./vendor/autoload.php’;

// 002 importing the Transformers class

use CodewithkyrianTransformersTransformers;

// 003 importing the pipeline function

use function CodewithkyrianTransformersPipelinespipeline;

// 004 initializing the Transformers class setting the cache directory for models

Transformers::setup()->setCacheDir(‘./models’)->apply();

// 005 initializing a pipeline for sentiment-analysis

$pipe = pipeline(‘sentiment-analysis’);

// 006 setting the list of sentences to analyze

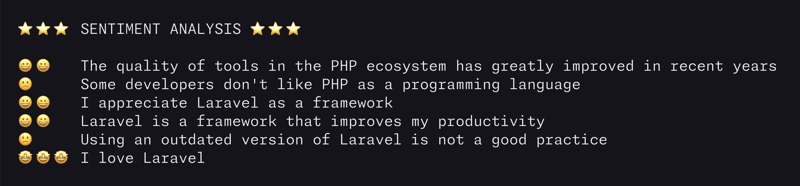

$feedbacks = [

‘The quality of tools in the PHP ecosystem has greatly improved in recent years’,

“Some developers don’t like PHP as a programming language”,

‘I appreciate Laravel as a framework’,

‘Laravel is a framework that improves my productivity’,

‘Using an outdated version of Laravel is not a good practice’,

‘I love Laravel’,

];

echo PHP_EOL.‘⭐⭐⭐ SENTIMENT ANALYSIS ⭐⭐⭐’.PHP_EOL.PHP_EOL;

// 007 looping thrgouh the sentences

foreach ($feedbacks as $input) {

// 008 calling the pipeline function

$out = $pipe($input);

// 009 using the output of the pipeline function

$icon =

$out[‘label’] === ‘POSITIVE’

? ($out[‘score’] > 0.9997

? ‘🤩🤩🤩’

: ‘😀😀 ‘)

: ‘🙁 ‘;

echo $icon.‘ ‘.$input.PHP_EOL;

}

echo PHP_EOL;

In the code sample:

001 requiring the autoload file from vendor;

002 importing the Transformers class;

003 importing the pipeline function;

004 initializing the Transformers class, setting the cache directory for models;

005 initializing a pipeline for sentiment-analysis;

006 setting the list of sentences to analyze;

007 looping through the sentences;

008 calling the pipeline function;

009 using the output of the pipeline function.
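Step 009 is plain PHP, so it can be pulled out of the loop and tested without downloading a model. The sketch below wraps the same ternary in a hypothetical sentimentIcon() helper (not part of Transformers PHP); it assumes, as the example does, that each pipeline call returns an array with label and score keys, and it keeps the 0.9997 threshold from the example.

```php
<?php

/**
 * Hypothetical helper (not part of Transformers PHP): maps one sentiment-analysis
 * result, assumed to look like ['label' => 'POSITIVE', 'score' => 0.99], to an icon.
 */
function sentimentIcon(array $out, float $enthusiasticThreshold = 0.9997): string
{
    if ($out['label'] !== 'POSITIVE') {
        return '🙁 ';
    }

    // Very confident positive predictions get the "enthusiastic" icon.
    return $out['score'] > $enthusiasticThreshold ? '🤩🤩🤩' : '😀😀 ';
}

// Made-up results shaped like the pipeline output used in the example.
$samples = [
    ['label' => 'POSITIVE', 'score' => 0.9999],
    ['label' => 'POSITIVE', 'score' => 0.95],
    ['label' => 'NEGATIVE', 'score' => 0.99],
];

foreach ($samples as $sample) {
    echo sentimentIcon($sample) . ' ' . $sample['label'] . ' ' . $sample['score'] . PHP_EOL;
}
```

Inside the loop, the assignment then becomes $icon = sentimentIcon($out);, and the threshold can be tuned in one place.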

In the example, sentiment analysis is selected by this line:

$pipe = pipeline('sentiment-analysis');

The pipeline() function takes one mandatory parameter, the task, which defines the functionality to use:

feature-extraction: feature extraction is a process in machine learning and signal processing where raw data is transformed into a set of meaningful features that can be used as input to a machine learning algorithm. These features are representations of specific characteristics or patterns present in the data that are relevant to the task at hand. Feature extraction helps to reduce the dimensionality of the data, focusing on the most important aspects and improving the performance of machine learning algorithms by providing them with more relevant and discriminative information. This process is commonly used in tasks such as image recognition, natural language processing, and audio signal processing.

sentiment-analysis: sentiment analysis is the process of determining and categorizing the emotional tone or sentiment expressed within a piece of text.

ner: NER stands for Named Entity Recognition, which is a natural language processing task that involves identifying and categorizing named entities within text into predefined categories such as names of persons, organizations, locations, expressions of times, quantities, monetary values, percentages, etc.

question-answering: question answering is the machine learning task of automatically answering natural language questions posed by users, based on a given context or knowledge base.

fill-mask: Fill-mask is a natural language processing task where a model is trained to predict a masked word or phrase in a sentence, often used in transformer-based language models like BERT for tasks such as text completion or filling in missing information.

summarization: summarization is the process of condensing a longer piece of text into a shorter version while retaining its key information and meaning.

translation: refers to the process of converting text from one language (xx) to another language (yy).

text-generation: Text generation is the automated process of producing coherent and contextually relevant textual content using machine learning models or algorithms.
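The feature vectors produced by feature-extraction (often called embeddings) are typically compared with cosine similarity, for example to find sentences with similar meaning. The sketch below implements cosine similarity in plain PHP; the short vectors are made up for illustration (real embeddings have hundreds of dimensions), and it does not assume any particular output shape from the feature-extraction pipeline.

```php
<?php

/** Cosine similarity between two equal-length vectors: dot(a, b) / (|a| * |b|). */
function cosineSimilarity(array $a, array $b): float
{
    if (count($a) !== count($b)) {
        throw new InvalidArgumentException('Vectors must have the same length.');
    }

    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;
    foreach ($a as $i => $value) {
        $dot += $value * $b[$i];
        $normA += $value ** 2;
        $normB += $b[$i] ** 2;
    }

    if ($normA == 0.0 || $normB == 0.0) {
        throw new InvalidArgumentException('Vectors must not be all zeros.');
    }

    return $dot / (sqrt($normA) * sqrt($normB));
}

// Made-up 3-dimensional "embeddings", for illustration only.
$vecA = [0.9, 0.1, 0.3];
$vecB = [0.8, 0.2, 0.35];
$vecC = [-0.5, 0.9, 0.1];

printf("A vs B: %.3f\n", cosineSimilarity($vecA, $vecB));
printf("A vs C: %.3f\n", cosineSimilarity($vecA, $vecC));
```

Values close to 1 mean the vectors point in the same direction (similar meaning), values near 0 mean they are unrelated, and negative values mean they point in opposite directions.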

This means you can select any of the tasks above, and Transformers PHP will download and cache the appropriate model for that task. Once the model is downloaded to the cache directory set with the setCacheDir() method of the Transformers class, you can run the script as many times as you like without an internet connection and without calling any external APIs.
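Before relying on offline execution, it can be useful to check that model files actually landed in the cache directory. The sketch below is a hypothetical helper, not part of Transformers PHP, that recursively lists the .onnx files under the directory passed to setCacheDir(). It assumes the downloaded models are stored as .onnx files somewhere below that directory, without assuming any particular subdirectory layout.

```php
<?php

/**
 * Hypothetical helper (not part of Transformers PHP): returns the sorted paths of
 * all .onnx files found below $cacheDir, or an empty array if the directory is missing.
 */
function findCachedOnnxModels(string $cacheDir): array
{
    if (!is_dir($cacheDir)) {
        return [];
    }

    // Walk the whole directory tree, skipping "." and ".." entries.
    $files = new RecursiveIteratorIterator(
        new RecursiveDirectoryIterator($cacheDir, FilesystemIterator::SKIP_DOTS)
    );

    $models = [];
    foreach ($files as $file) {
        /** @var SplFileInfo $file */
        if ($file->isFile() && strtolower($file->getExtension()) === 'onnx') {
            $models[] = $file->getPathname();
        }
    }
    sort($models);

    return $models;
}

// Same directory as in the example: Transformers::setup()->setCacheDir('./models')
$models = findCachedOnnxModels('./models');

if ($models === []) {
    echo 'No cached .onnx models found: the first run will need an internet connection.' . PHP_EOL;
} else {
    echo 'Cached models:' . PHP_EOL;
    foreach ($models as $path) {
        echo ' - ' . $path . PHP_EOL;
    }
}
```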

References

Transformers PHP: a toolkit for PHP developers to add machine learning capabilities to their PHP projects

ONNX Runtime with PHP: run ONNX models in PHP

ONNX Runtime: cross-platform, high-performance ML inferencing and training accelerator