1. Meet Llama3: Meta’s Game-Changing Language Model

Meta has just launched Llama3, its latest language model, and it’s quickly becoming the talk of the tech world. This release isn’t just an update: it introduces a suite of exciting features poised to revolutionize the field.

At vishwa.ai, our mission is clear: to deliver top-tier AI-driven automation for our customers. This means we’re constantly scouting for breakthrough technologies that can enhance the precision and efficiency of our services. Enter Llama3. We’ve begun exploring the potential of this new model and are eager to share our initial findings with you.

In this blog, you’ll get a sneak peek into our early experiences with Llama3. Our research team is rigorously testing the model across a variety of projects, and we plan to provide a detailed analysis of its performance in different scenarios soon. Stay tuned as we delve deeper into the capabilities of Llama3 and what it means for the future of AI automation.

2. Innovative Changes in Training Llama3

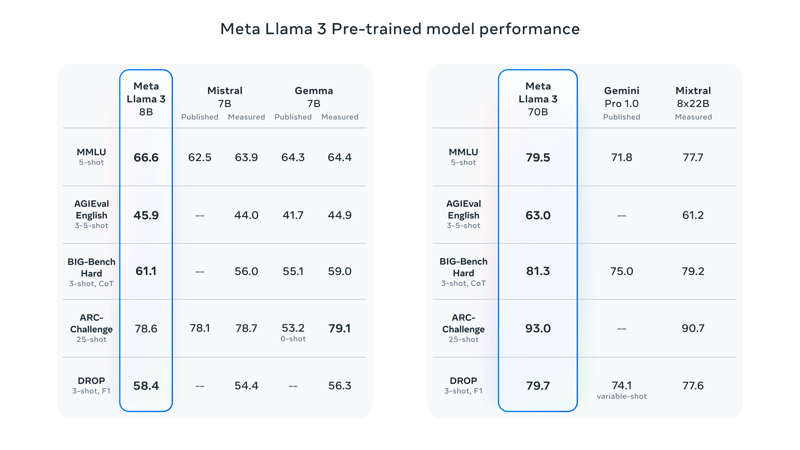

Meta has significantly improved Llama3 over its predecessor, Llama2, in both its training process and its capabilities. Llama3 was released in 8B and 70B sizes, and Meta claims that these models “are a major leap over Llama 2 and establish a new state-of-the-art for LLM models at those scales”.

Expanded Training Dataset: Llama3 is trained on over 15 trillion tokens, seven times more than Llama2. The dataset contains four times more code, and over 5% of it consists of high-quality non-English data covering more than 30 languages, with the aim of strengthening both its coding capabilities and its usefulness beyond English.
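As a quick sanity check on these figures, the short sketch that follows works through the arithmetic. It assumes Llama2 was pretrained on roughly 2 trillion tokens, a number taken from the Llama 2 paper rather than from Meta's Llama3 announcement, and it treats "over 15 trillion" and "over 5%" as lower bounds.

```python
# Figures quoted for Llama3's pretraining data (both are lower bounds).
LLAMA3_TOKENS = 15e12        # "over 15 trillion tokens"
NON_ENGLISH_SHARE = 0.05     # "over 5%" high-quality non-English data

# Assumption: Llama2 was pretrained on about 2 trillion tokens
# (figure from the Llama 2 paper, not from the Llama3 announcement).
LLAMA2_TOKENS = 2e12

# How much larger the Llama3 dataset is than Llama2's.
ratio = LLAMA3_TOKENS / LLAMA2_TOKENS

# Minimum number of non-English tokens implied by the 5% share.
non_english_tokens = LLAMA3_TOKENS * NON_ENGLISH_SHARE

print(f"Llama3 / Llama2 token ratio: {ratio:.1f}x")
print(f"Non-English tokens: at least {non_english_tokens / 1e9:.0f}B")
```

The ratio comes out to about 7.5x, in line with the "seven times" description, and the non-English portion works out to at least roughly 750 billion tokens.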

Advanced Data Filtering: Meta built data-filtering pipelines to keep the quality of the training data high. These pipelines include heuristic filters, NSFW filters, semantic deduplication, and text classifiers that predict data quality. Notably, Meta used Llama2 to generate the training data for these text-quality classifiers, so the previous generation of the model helped curate data for the next.
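To make the idea of a multi-stage filtering pipeline more concrete, here is a minimal sketch that chains the same kinds of stages: heuristic checks, an NSFW filter, deduplication, and a quality classifier. Meta has not published its actual filters, so everything here is an assumption: the blocklist terms, the thresholds, and the `quality_score` callable are hypothetical, and the deduplication step uses word-shingle Jaccard similarity as a simple lexical stand-in for the embedding-based semantic deduplication Meta describes.

```python
import re
from typing import Callable, Iterable, List, Set, Tuple

# Hypothetical blocklist standing in for a real NSFW classifier.
NSFW_TERMS = {"nsfw_term_a", "nsfw_term_b"}


def heuristic_ok(doc: str) -> bool:
    """Cheap rule-based checks: enough words, and mostly alphabetic characters."""
    if len(doc.split()) < 5:
        return False
    alpha = sum(ch.isalpha() for ch in doc)
    return alpha / max(len(doc), 1) > 0.6


def nsfw_ok(doc: str) -> bool:
    """Reject documents containing any blocklisted token."""
    tokens = set(re.findall(r"\w+", doc.lower()))
    return tokens.isdisjoint(NSFW_TERMS)


def shingles(doc: str, k: int = 3) -> Set[Tuple[str, ...]]:
    """Set of k-word shingles, used for near-duplicate detection."""
    words = doc.lower().split()
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: Set, b: Set) -> float:
    """Jaccard similarity between two shingle sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def filter_corpus(docs: Iterable[str],
                  quality_score: Callable[[str], float],
                  dedup_threshold: float = 0.8,
                  quality_threshold: float = 0.5) -> List[str]:
    """Run each document through heuristic, NSFW, dedup, and quality stages in order."""
    kept: List[str] = []
    kept_shingles: List[Set[Tuple[str, ...]]] = []
    for doc in docs:
        if not (heuristic_ok(doc) and nsfw_ok(doc)):
            continue
        sh = shingles(doc)
        # Lexical near-duplicate check (stand-in for semantic deduplication).
        if any(jaccard(sh, prev) >= dedup_threshold for prev in kept_shingles):
            continue
        # Model-based quality gate; any scoring function can be plugged in.
        if quality_score(doc) < quality_threshold:
            continue
        kept.append(doc)
        kept_shingles.append(sh)
    return kept
```

Ordering the cheap rule-based checks first means the more expensive deduplication and classifier stages only see documents that already passed the basic filters. In a setup like Meta's, `quality_score` would be a trained text-quality classifier; here it can be any function returning a score.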

Optimized Data Mixing: Meta ran extensive experiments on how best to combine data from different sources, and selected a mix intended to make Llama3 perform well across use cases ranging from trivia questions and STEM problems to coding and historical knowledge.
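A data mix is essentially a set of sampling weights over sources. The sketch that follows shows one simple way to draw training documents according to such weights. The source names and the weights themselves are hypothetical placeholders; Meta's announcement does not state the exact proportions it chose.

```python
import random
from typing import Dict, List


def sample_mixture(sources: Dict[str, List[str]],
                   weights: Dict[str, float],
                   n: int,
                   seed: int = 0) -> List[str]:
    """Draw n documents, choosing each document's source in proportion to its weight."""
    missing = set(weights) - set(sources)
    if missing:
        raise KeyError(f"weights reference unknown sources: {sorted(missing)}")
    rng = random.Random(seed)  # seeded so the mix is reproducible
    names = list(weights)
    picks = rng.choices(names, weights=[weights[name] for name in names], k=n)
    # For each chosen source, pick one document from it uniformly at random.
    return [rng.choice(sources[name]) for name in picks]


# Hypothetical sources and mixture weights, for illustration only.
sources = {
    "general": ["general-1", "general-2"],
    "code": ["code-1"],
    "multilingual": ["multi-1"],
}
weights = {"general": 0.5, "code": 0.3, "multilingual": 0.2}
batch = sample_mixture(sources, weights, n=10_000)
```

Tuning a mix then amounts to changing these weights, training (or proxy-training) on the result, and comparing downstream scores, which is the kind of experiment the paragraph above describes.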

Enhanced Training Efficiency: To train its largest models, Meta combined three forms of parallelization: data, model, and pipeline parallelization. Together with improvements in GPU utilization and training infrastructure, Meta reports that these efforts made Llama3 training roughly three times more efficient than Llama2 training.
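When data, model, and pipeline parallelism are combined, every GPU is identified by one coordinate along each axis, and the total GPU count is the product of the three degrees. The sketch that follows maps a global GPU rank to those coordinates and back. It treats model parallelism as tensor parallelism (splitting individual layers across GPUs), and it uses one common layout convention in which tensor-parallel ranks are adjacent; the degrees in the example are hypothetical, and Meta has not described its exact layout in the announcement.

```python
from typing import NamedTuple


class ParallelCoords(NamedTuple):
    data: int      # which data-parallel replica (sees a different slice of the batch)
    pipeline: int  # which pipeline stage (holds a contiguous group of layers)
    tensor: int    # which shard within a tensor-parallel (model-parallel) group


def rank_to_coords(rank: int, dp: int, pp: int, tp: int) -> ParallelCoords:
    """Map a global rank to (data, pipeline, tensor) coordinates; tensor varies fastest."""
    world = dp * pp * tp
    if not 0 <= rank < world:
        raise ValueError(f"rank {rank} outside world of size {world}")
    tensor = rank % tp
    pipeline = (rank // tp) % pp
    data = rank // (tp * pp)
    return ParallelCoords(data, pipeline, tensor)


def coords_to_rank(c: ParallelCoords, pp: int, tp: int) -> int:
    """Inverse of rank_to_coords under the same layout convention."""
    return (c.data * pp + c.pipeline) * tp + c.tensor


# Hypothetical example: 2-way data x 2-way pipeline x 2-way tensor = 8 GPUs.
coords = rank_to_coords(5, dp=2, pp=2, tp=2)  # ParallelCoords(data=1, pipeline=0, tensor=1)
```

Keeping tensor-parallel ranks adjacent is a common choice because those GPUs exchange activations on every layer and benefit most from sharing fast intra-node links.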

3. Human Evaluation of Llama3

To thoroughly assess the effectiveness of Llama3, Meta developed a new high-quality human evaluation set, consisting of 1,800 prompts across 12 essential use cases. These include asking for advice, brainstorming, classification, closed and open question answering, coding, creative writing, data extraction, role-playing specific characters or personas, reasoning, rewriting, and summarization.

To prevent accidental overfitting to these prompts, Meta restricted access to the evaluation set: even its own modeling teams could not see it. This was intended to keep the assessment of Llama3’s performance unbiased.

Llama3 was then compared against other leading models, including Claude Sonnet, Mistral Medium, and GPT-3.5. Meta reports that human evaluators generally preferred Llama3’s responses, which points to strong performance across varied and complex scenarios and a clear advance over earlier models.
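Pairwise human comparisons like these are commonly summarized as win, tie, and loss rates against each competitor. The sketch that follows shows how such rates could be aggregated from individual judgments. The judgment records and their format are hypothetical and do not reflect Meta's actual results.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

OUTCOMES = ("win", "tie", "loss")


def win_rates(judgments: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Aggregate (opponent, outcome) pairs, scored from Llama3's side, into rates per opponent."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for opponent, outcome in judgments:
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome}")
        counts[opponent][outcome] += 1
    rates: Dict[str, Dict[str, float]] = {}
    for opponent, c in counts.items():
        total = sum(c.values())
        rates[opponent] = {k: c[k] / total for k in OUTCOMES}
    return rates


# Hypothetical judgments, for illustration only.
judgments = [
    ("GPT-3.5", "win"), ("GPT-3.5", "win"), ("GPT-3.5", "loss"), ("GPT-3.5", "tie"),
    ("Claude Sonnet", "win"), ("Claude Sonnet", "loss"),
]
rates = win_rates(judgments)
```

Reporting ties separately, rather than folding them into wins or losses, makes it clearer how often evaluators saw no meaningful difference between two models.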

4. Exploring Llama3: The Foundation for Use-Case-Specific AI Models

At vishwa.ai, we are currently exploring the potential of Llama3 as a foundational model. This model aligns with our commitment to developing specialized AI personas tailored to distinct use cases.

Llama3’s flexibility makes it an ideal core component for our systems, allowing our developers the freedom to shape applications that meet our clients’ specific goals.

As we delve deeper into this exploration, our focus is on rigorously testing and enhancing input and output safeguards for each AI persona. Our aim is to achieve maximum accuracy and effectiveness, ensuring that as we integrate Llama3, it enhances our capability to deliver precise and reliable AI-driven solutions tailored to the unique needs of businesses.
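One simple way to structure per-persona input and output safeguards is to wrap every model call in two checks: one on the user's message before generation, and one on the model's reply afterwards. The sketch that follows illustrates that pattern. It is not vishwa.ai's implementation; the `Persona` class, the check functions, and the `generate` callable (standing in for a call to a Llama3 deployment) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Persona:
    name: str
    is_input_allowed: Callable[[str], bool]   # screens the user's message
    is_output_allowed: Callable[[str], bool]  # screens the model's reply
    refusal: str = "Sorry, I can't help with that request."


def guarded_reply(persona: Persona, generate: Callable[[str], str], user_msg: str) -> str:
    """Run the input check, call the model, then run the output check."""
    if not persona.is_input_allowed(user_msg):
        return persona.refusal
    reply = generate(user_msg)
    if not persona.is_output_allowed(reply):
        return persona.refusal
    return reply


# Hypothetical persona that only handles invoice questions and never reveals passwords.
invoice_bot = Persona(
    name="invoice-assistant",
    is_input_allowed=lambda m: "invoice" in m.lower(),
    is_output_allowed=lambda r: "password" not in r.lower(),
)
fake_generate = lambda m: "Summary: " + m  # stand-in for a real Llama3 call
```

Because each persona carries its own checks, the same underlying model can serve several use cases with different boundaries, and each safeguard can be tested in isolation.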

5. Safety & Guardrails

In our exploration of Llama3, we’ve paid particular attention to Meta’s approach to model safety. Meta uses red-teaming, a testing method in which internal and external experts probe the model with adversarial prompts to uncover vulnerabilities.

This extensive safety evaluation covers several key risk areas, including chemical, biological, and cybersecurity misuse, with the aim of making the model resilient against potential abuse.
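A red-teaming campaign like this can be summarized as a failure rate per risk area: the fraction of adversarial prompts that still produced an unsafe response. The sketch that follows shows that bookkeeping under stated assumptions. The prompt set, the `model` callable, and the `is_unsafe` judge (which in practice might be a human reviewer or a safety classifier) are all hypothetical, and this is not Meta's evaluation harness.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple


def red_team_report(prompts: List[Tuple[str, str]],
                    model: Callable[[str], str],
                    is_unsafe: Callable[[str, str], bool]) -> Dict[str, float]:
    """Per risk area, the fraction of adversarial prompts that yielded an unsafe response."""
    attempts: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for area, prompt in prompts:
        response = model(prompt)
        attempts[area] += 1
        if is_unsafe(prompt, response):
            failures[area] += 1
    return {area: failures[area] / attempts[area] for area in attempts}


# Hypothetical adversarial prompts tagged by risk area, with stub model and judge.
prompts = [("chemical", "p1"), ("chemical", "p2"), ("cyber", "p3")]
stub_model = lambda p: "REFUSED" if p == "p1" else "detailed answer"
stub_judge = lambda p, r: r != "REFUSED"
report = red_team_report(prompts, stub_model, stub_judge)
```

Breaking results down by area, rather than reporting a single overall number, shows where a model is weakest and where additional safeguards are most needed.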

Additionally, Meta’s Llama Guard models add an extra layer of protection by screening prompts and responses for safety. These safeguards aim to protect against misuse, including unsafe command execution and prompt injection attacks. From our perspective, these are critical advancements that could set a precedent for future AI deployments.
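Llama Guard is itself a language model that classifies a prompt or response and answers in text, so an application has to parse that answer before acting on it. The sketch that follows assumes the output format described on Meta's Llama Guard 2 model card: a first line reading `safe` or `unsafe`, and, for unsafe content, a second line of comma-separated category codes such as `S1`. The `GuardVerdict` type and parser are hypothetical helpers, not part of any Meta library.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class GuardVerdict:
    safe: bool
    categories: Tuple[str, ...] = ()  # violated category codes, empty when safe


def parse_guard_output(text: str) -> GuardVerdict:
    """Parse a guard model's 'safe' / 'unsafe\\n<codes>' text output."""
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty guard output")
    label = lines[0].lower()
    if label == "safe":
        return GuardVerdict(safe=True)
    if label == "unsafe":
        cats: Tuple[str, ...] = ()
        if len(lines) > 1:
            cats = tuple(c.strip() for c in lines[1].split(",") if c.strip())
        return GuardVerdict(safe=False, categories=cats)
    raise ValueError(f"unexpected guard label: {lines[0]!r}")
```

Raising on unexpected output, rather than silently treating it as safe, lets the calling code fail closed and block the message whenever the guard's answer cannot be interpreted.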

6. What’s on the Horizon for Meta’s Llama3? Key Developments to Watch

At vishwa.ai, we’re always tuned into the latest AI developments, and Meta’s ongoing updates to the Llama3 series are no exception. Currently offered in 8B and 70B model sizes, these are just the starting point for what’s shaping up to be an expansive range of options.

Upcoming Enhancements to Llama3

Meta is expanding the Llama3 family and is currently training models with over 400B parameters. These larger models are expected to bring new capabilities such as multimodality: rather than only understanding and generating text, they would also be able to process other types of data, such as images and audio, opening up new avenues for AI applications.

Moreover, these models are being designed to handle multiple languages and support significantly longer context windows, enhancing their ability to engage in more complex conversations and understand deeper contexts.

7. Our Commitment to Cutting-Edge AI

As Meta continues to push the boundaries of what AI can do, we at vishwa.ai are keeping a close eye on these developments. Our goal is to integrate these innovations into our services, ensuring that our customers always have access to the most advanced AI technologies.

Stay connected with our blog for detailed analyses and insights as we explore how these new capabilities can transform industries and revolutionize the way businesses operate.