Table of Contents 📚

🌟 Introduction

💡 The Solution

🛠️ Understanding ThreadPool

💻 Implementing the ThreadPool Class from Scratch

🌐 Implementing ThreadPool in our Web Server

📊 Result Overview

In our previous discussion on TCP server design, we explored the concept of a multi-threaded server, where each incoming request spawns a new thread. While this method initially seems intuitive, it poses challenges in terms of scalability and resource management. Imagine thousands of threads being created simultaneously to handle thousands of requests; this could easily overwhelm system resources and degrade performance.

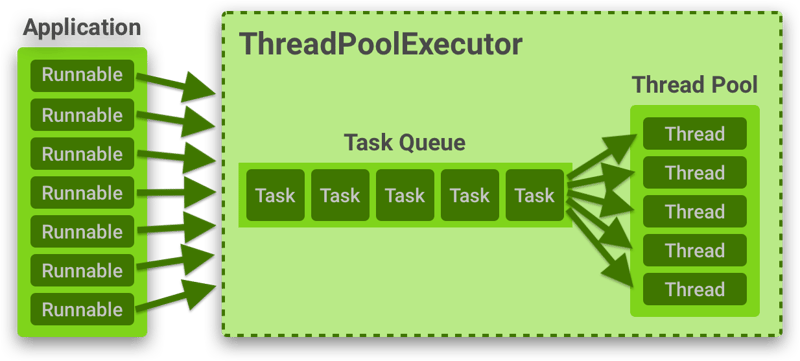

To address this issue, we introduce the concept of a ThreadPool. A ThreadPool maintains a predefined number of threads that can be reused to handle incoming requests. This approach optimizes resource usage by preventing the excessive creation of threads and effectively managing system resources.

A ThreadPool essentially consists of a collection of threads waiting to execute tasks. Instead of creating a new thread for each request, tasks are submitted to the ThreadPool, where available threads pick them up for execution. Once a thread completes its task, it becomes available to handle another incoming request.
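
To make the idea concrete before we build our own pool, here is a minimal sketch that uses the JDK's built-in `Executors.newFixedThreadPool` rather than the class developed in this article. The class name `FixedPoolDemo` and the helper `runTasks` are made up for illustration. It submits 4 tasks to a pool of 2 threads and records which threads ran them.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class FixedPoolDemo {

    // Runs taskCount short tasks on a pool of poolSize threads and returns
    // the names of the threads that actually executed them.
    static Set<String> runTasks(int poolSize, int taskCount) throws InterruptedException {
        Set<String> workerNames = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        for (int i = 0; i < taskCount; i++) {
            final int taskId = i;
            pool.execute(() -> {
                String name = Thread.currentThread().getName();
                workerNames.add(name);
                System.out.println("Task " + taskId + " ran on " + name);
            });
        }
        pool.shutdown();                             // stop accepting new tasks
        pool.awaitTermination(5, TimeUnit.SECONDS);  // wait for submitted tasks to finish
        return workerNames;
    }

    public static void main(String[] args) throws InterruptedException {
        Set<String> workers = runTasks(2, 4);
        System.out.println("Distinct worker threads used: " + workers.size());
    }
}
```

However many tasks we submit, the number of distinct worker threads never exceeds the pool size. That is exactly the property our own ThreadPool needs to provide.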

🚀 Implementing the ThreadPool Class from Scratch

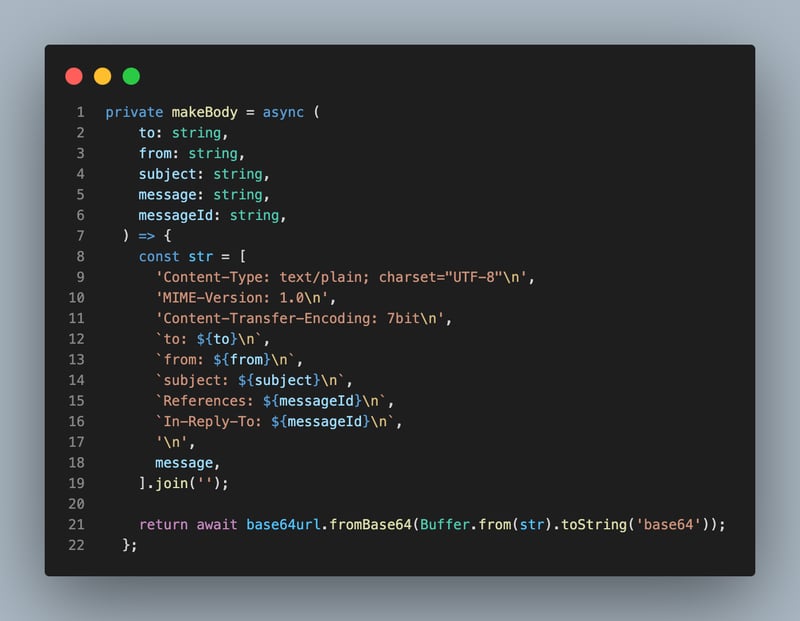

Creating a ThreadPool from scratch involves defining a mechanism to manage threads and execute tasks concurrently. Here’s how you can do it:

1. Define the ThreadPool Class:

The ThreadPool maintains a task queue and a list of worker threads.

The ThreadPool class consists of several components:

Task Queue (Blocking Queue): This contains the tasks. Tasks are added to this queue, and the workers (threads) pick them up and execute them.

List of ThreadPool Runnables: This contains the list of all available worker threads.

isStopped Flag: This flag is used to check if the ThreadPool is currently active or stopped. When the ThreadPool is stopped, it won’t accept any new tasks, but it will allow the existing tasks to finish.

Methods:

1. Constructor

    import java.util.ArrayList;
    import java.util.List;
    import java.util.concurrent.ArrayBlockingQueue;
    import java.util.concurrent.BlockingQueue;

    public class ThreadPool {

        private final BlockingQueue<Runnable> taskQueue;    // pending tasks
        private final List<PoolThreadRunnable> runnables;   // one runnable per worker thread
        private boolean isStopped;                          // true once stop() has been called

        // Constructor for initializing the ThreadPool
        public ThreadPool(int numberOfThreads, int taskQueueSize) {
            this.taskQueue = new ArrayBlockingQueue<>(taskQueueSize);
            this.runnables = new ArrayList<>();
            this.isStopped = false;

            // Create and start threads in the pool
            for (int i = 0; i < numberOfThreads; i++) {
                PoolThreadRunnable runnable = new PoolThreadRunnable(taskQueue);
                runnables.add(runnable);
                new Thread(runnable).start();
            }
        }

2. Method to Submit a Task for Execution

This method allows users to submit a task to the ThreadPool for execution. It adds the task to the task queue for processing by the ThreadPool.

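    public synchronized void execute(Runnable task) {
        if (isStopped) {
            throw new IllegalStateException("ThreadPool is stopped");
        }
        // offer() returns false instead of blocking when the queue is full,
        // so reject the task explicitly rather than dropping it silently
        if (!taskQueue.offer(task)) {
            throw new IllegalStateException("Task queue is full");
        }
    }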
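
The behaviour of `offer` on a bounded queue is easy to overlook, so here is a small standalone sketch of it. `QueueFullDemo` and its helper methods are hypothetical names used only for this illustration. The calls themselves (`offer`, and the timed `offer` overload) are standard `BlockingQueue` methods.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class QueueFullDemo {

    // Tries to enqueue `attempts` no-op tasks into a queue of the given
    // capacity with no consumer running, and returns how many were accepted.
    static int countAccepted(int capacity, int attempts) {
        BlockingQueue<Runnable> queue = new ArrayBlockingQueue<>(capacity);
        int accepted = 0;
        for (int i = 0; i < attempts; i++) {
            if (queue.offer(() -> { })) {   // offer() never blocks
                accepted++;
            }
        }
        return accepted;
    }

    // Timed offer: waits up to the timeout for free space before giving up.
    static boolean offerWithTimeout(BlockingQueue<Runnable> queue, Runnable task, long millis)
            throws InterruptedException {
        return queue.offer(task, millis, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Accepted: " + countAccepted(2, 5) + " of 5");

        BlockingQueue<Runnable> full = new ArrayBlockingQueue<>(1);
        full.offer(() -> { });
        System.out.println("Timed offer on full queue: " + offerWithTimeout(full, () -> { }, 50));
    }
}
```

With a capacity of 2 and no worker draining the queue, only the first 2 of the 5 tasks are accepted. Whether a full queue should reject, wait, or block is a design choice; `put` is the blocking alternative if the caller should wait for space.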

3. Method to Stop the ThreadPool

This method stops the ThreadPool by setting the isStopped flag to true. It then stops all worker threads in the ThreadPool.

    public synchronized void stop() {
        isStopped = true;
        for (PoolThreadRunnable runnable : runnables) {
            runnable.stop();
        }
    }

4. Method to Wait Until All Tasks are Finished

This method blocks until the task queue is empty. It repeatedly checks the queue and sleeps for a short duration while tasks remain. Note that an empty queue means every task has been picked up by a worker, not that every task has finished running.

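    public void waitUntilAllTasksFinished() {
        // Not synchronized: holding the pool's lock while polling would block
        // execute() and stop() on other threads for the whole wait
        while (!taskQueue.isEmpty()) {
            try {
                Thread.sleep(2);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();   // preserve the interrupt status
                throw new RuntimeException(e);
            }
        }
    }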
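
Polling the queue only tells us when tasks have been taken, not when they have completed. If we need to wait for completion, one possible approach (not the one used in this article) is to count tasks that are still in flight. The sketch below is an assumption-laden illustration: `CompletionTracker`, `track`, and `awaitAll` are hypothetical names, and plain threads stand in for the pool's `execute` call.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CompletionTracker {
    private int pending = 0;   // tasks tracked but not yet finished; guarded by `this`

    // Counts the task immediately and returns a wrapper that un-counts it
    // once it has run, even if the task throws.
    synchronized Runnable track(Runnable task) {
        pending++;
        return () -> {
            try {
                task.run();
            } finally {
                taskDone();
            }
        };
    }

    private synchronized void taskDone() {
        pending--;
        notifyAll();   // wake any thread blocked in awaitAll()
    }

    // Blocks until every tracked task has finished running.
    synchronized void awaitAll() throws InterruptedException {
        while (pending > 0) {
            wait();
        }
    }
}

class CompletionTrackerDemo {

    // Starts `tasks` slow tasks, waits for all of them, and returns how many finished.
    static int runAndCount(int tasks) throws InterruptedException {
        CompletionTracker tracker = new CompletionTracker();
        AtomicInteger finished = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            Runnable tracked = tracker.track(() -> {
                try {
                    Thread.sleep(20);   // simulate work
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                finished.incrementAndGet();
            });
            new Thread(tracked).start();   // stand-in for pool.execute(tracked)
        }
        tracker.awaitAll();
        return finished.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Finished tasks: " + runAndCount(4));
    }
}
```

Because the counter is decremented only after a task has run, `awaitAll` returns once the work is done rather than once the queue is empty. It also avoids the sleep-and-check loop.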

2. Define the PoolThreadRunnable Class

The PoolThreadRunnable class implements the Runnable interface and represents the worker threads in the ThreadPool. Each worker thread continuously takes tasks from the task queue and executes them.

Methods:

1. Constructor for PoolThreadRunnable

The constructor initializes a PoolThreadRunnable instance with a task queue.

    public PoolThreadRunnable(BlockingQueue<Runnable> taskQueue) {
        this.taskQueue = taskQueue;
    }

2. Run Method for PoolThreadRunnable

The run method is overridden from the Runnable interface. It defines the behaviour of the worker thread when it is started.

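    @Override
    public void run() {
        // Remember the worker thread so that stop() can interrupt it
        currentThread = Thread.currentThread();
        while (!isStopped) {
            try {
                // take() blocks until a task is available
                Runnable runnable = taskQueue.take();
                System.out.println("Starting : " + currentThread.getName());
                runnable.run();
            } catch (InterruptedException e) {
                // stop() interrupts the blocking take(); the loop condition
                // then sees isStopped == true and the thread exits
            }
        }
    }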

3. Stop Method for PoolThreadRunnable

The stop method is used to stop the execution of the worker thread.

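    public void stop() {
        isStopped = true;
        // currentThread is set in run(); it is still null if the worker has not started
        if (currentThread != null) {
            System.out.println("Stopping : " + currentThread.getName());
            currentThread.interrupt();
        }
    }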
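
The three pieces above can be assembled into one class. The snippets do not show the field declarations, so the fields below (and marking them `volatile` so that a write in `stop()` is visible to the worker thread) are assumptions on my part. `WorkerDemo` is a hypothetical harness added only to exercise the class.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

class PoolThreadRunnable implements Runnable {
    private final BlockingQueue<Runnable> taskQueue;
    private volatile Thread currentThread;        // assumed field: set once run() starts
    private volatile boolean isStopped = false;   // assumed field: written by stop()

    PoolThreadRunnable(BlockingQueue<Runnable> taskQueue) {
        this.taskQueue = taskQueue;
    }

    @Override
    public void run() {
        currentThread = Thread.currentThread();
        while (!isStopped) {
            try {
                taskQueue.take().run();   // blocks until a task is available
            } catch (InterruptedException e) {
                // stop() interrupted the blocking take(); the loop re-checks isStopped
            }
        }
    }

    public void stop() {
        isStopped = true;
        Thread thread = currentThread;
        if (thread != null) {
            thread.interrupt();
        }
    }
}

class WorkerDemo {

    // Runs `tasks` tasks on a single worker, then stops it. Returns true if
    // every task ran and the worker thread exited.
    static boolean runThenStop(int tasks) throws InterruptedException {
        BlockingQueue<Runnable> queue = new ArrayBlockingQueue<>(tasks);
        PoolThreadRunnable worker = new PoolThreadRunnable(queue);
        Thread thread = new Thread(worker, "worker-1");
        thread.start();

        CountDownLatch done = new CountDownLatch(tasks);
        for (int i = 0; i < tasks; i++) {
            queue.put(done::countDown);
        }
        boolean allRan = done.await(2, TimeUnit.SECONDS);

        worker.stop();
        thread.join(2000);
        return allRan && !thread.isAlive();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Worker ran all tasks and stopped: " + runThenStop(3));
    }
}
```

The interrupt in `stop()` matters because an idle worker is blocked inside `take()`. Setting the flag alone would not wake it up.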

🌐 Implementing ThreadPool in our Web Server

In the context of our web server implementation, we’ll integrate the ThreadPool to handle incoming client connections. Here’s how we’ll do it:

Socket initialization: Initialize the server socket to listen on port 1234.

ThreadPool initialization: Initialize the ThreadPool with a fixed number of threads and a task queue size, for example (2, 10).

Accepting connections: The server accepts each incoming client connection.

Task Execution: When a client connects to the server, instead of creating a new thread for each connection, we’ll submit the client handling task to the ThreadPool. The ThreadPool will manage the execution of tasks, ensuring that they are picked up by available threads for processing.

By implementing a ThreadPool in our web server, we balance concurrency and resource utilization, resulting in a more scalable and efficient server architecture.
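
The steps above can be sketched roughly as follows. To keep the example self-contained, it is written against the JDK's `Executor` interface, with `Executors.newFixedThreadPool(2)` standing in for our own `new ThreadPool(2, 10)`. Since `Executor` has a single `execute(Runnable)` method, our pool could be passed in as `threadPool::execute`. The class name `PooledServer`, the `handle` method, and the response text are all hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class PooledServer {

    // Accepts `connections` clients and hands each one to the pool instead
    // of starting a new thread per connection.
    static void serve(ServerSocket serverSocket, Executor pool, int connections) throws IOException {
        for (int i = 0; i < connections; i++) {
            Socket client = serverSocket.accept();
            pool.execute(() -> handle(client));
        }
    }

    // Hypothetical handler: skips the request and replies with the worker's name.
    static void handle(Socket client) {
        try (Socket c = client) {
            BufferedReader in = new BufferedReader(
                    new InputStreamReader(c.getInputStream(), StandardCharsets.US_ASCII));
            String line;
            while ((line = in.readLine()) != null && !line.isEmpty()) {
                // skip the request line and headers; this sketch ignores them
            }
            String body = "Handled by " + Thread.currentThread().getName() + "\n";
            String response = "HTTP/1.1 200 OK\r\n"
                    + "Content-Length: " + body.length() + "\r\n"
                    + "Connection: close\r\n\r\n" + body;
            OutputStream out = c.getOutputStream();
            out.write(response.getBytes(StandardCharsets.US_ASCII));
            out.flush();
        } catch (IOException e) {
            System.err.println("Client error: " + e.getMessage());
        }
    }

    public static void main(String[] args) throws IOException {
        ExecutorService pool = Executors.newFixedThreadPool(2);   // stand-in for new ThreadPool(2, 10)
        try (ServerSocket serverSocket = new ServerSocket(1234)) {
            serve(serverSocket, pool, 4);   // handle 4 connections, then exit
        } finally {
            pool.shutdown();
        }
    }
}
```

The accept loop itself stays single-threaded. Only the per-client work is handed off, so a slow client occupies one worker without blocking new connections from being accepted.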

Our system handles 4 requests coming from different clients at the same time using only 2 threads. This means that each thread is responsible for handling multiple requests, showing efficient resource usage.

Here, you can see the responses our system generates for each incoming request. It gives a clear picture of how well our system handles multiple clients simultaneously.

Task-wise Thread Execution:

In the image below, you’ll notice that despite there being 4 requests, only 2 threads are used to handle them. This demonstrates that our system effectively reuses threads, making the most out of limited resources.

This setup ensures that our system operates efficiently and can manage incoming requests smoothly while optimizing resource utilization.

🌟 Thank you for reading! I appreciate your time and hope you found the article helpful. 📚 I’m open to suggestions and feedback, so feel free to reach out. Let’s keep exploring together! 🚀